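#Note: for every K8S cluster, boot the network proxy machine first, and only then boot the machines the K8S cluster is deployed on!

Set up the internet proxy

First, follow: "(Internet proxy) Connecting to Clash from a Linux terminal [no GUI]"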

#Configure /etc/hosts on every node (kxsw-1 is the proxy machine)

[root@node-X ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain
172.16.1.21 node-1
172.16.1.22 node-2
172.16.1.23 node-3
172.16.1.100 kxsw-1
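If you would rather not edit /etc/hosts by hand on each node, the entries above can be appended by a small idempotent helper that skips names already present. This is a minimal sketch, not part of the original procedure; the function name `add_host_entry` is hypothetical, and it assumes a POSIX shell with awk.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not
# already mapped there, so the step can be re-run safely on every node.
# Example (as root on each node):
#   for entry in "172.16.1.21 node-1" "172.16.1.22 node-2" \
#                "172.16.1.23 node-3" "172.16.1.100 kxsw-1"; do
#     add_host_entry /etc/hosts $entry   # unquoted on purpose: splits into IP and NAME
#   done
add_host_entry() {
  hosts_file=$1
  ip=$2
  name=$3
  # Exit 0 if NAME appears as a host name (fields 2..N) on a non-comment line.
  if awk -v n="$name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$hosts_file"; then
    echo "skip:  $name already in $hosts_file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    echo "added: $ip $name"
  fi
}
```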

[root@node-X ~]# cat /etc/profile
export http_proxy=http://kxsw-1:7890
export https_proxy=http://kxsw-1:7890
export no_proxy="localhost, 127.0.0.1"

[root@node-X ~]# source /etc/profile

#Test on every node (a normal response is all you need)

[root@node-X ~]# curl google.com
<HTML><HEAD><meta http-equiv="content-type" content="text/html;charset=utf-8">
<TITLE>301 Moved</TITLE></HEAD><BODY>
<H1>301 Moved</H1>
The document has moved
<A HREF="http://www.google.com/">here</A>.
</BODY></HTML>

[root@node-X ~]# curl twitter.com

[root@node-X ~]# curl www.baidu.com
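An empty reply, like the one from `curl twitter.com` above, is hard to judge by eye. A minimal sketch that prints the HTTP status code instead, assuming curl picks up `http_proxy`/`https_proxy` from the environment as usual; `check_url` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the HTTP status a URL returns through the proxy
# configured in http_proxy/https_proxy (curl reads those variables itself).
# Status 000 means curl got no HTTP response at all (DNS, proxy, or
# connection failure); any real status code means the request went through.
# Example: for u in google.com twitter.com www.baidu.com; do check_url "$u"; done
check_url() {
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$1" 2>/dev/null) || true
  echo "$1 -> ${code:-000}"
  case "$code" in
    ''|000) return 1 ;;
    *) return 0 ;;
  esac
}
```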

#Run only on node-1

[root@node-1 ~]# cd kubespray-2.15.0/

#Configure the network proxy for Kubespray

[root@node-1 kubespray-2.15.0]# vim inventory/mycluster/group_vars/all/all.yml
http_proxy: "http://kxsw-1:7890"
https_proxy: "http://kxsw-1:7890"

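One-click installation

#Start the one-click installation (run only on node-1; -b runs the tasks with privilege escalation, -vvvv gives the most verbose output)

[root@node-1 kubespray-2.15.0]# ansible-playbook -i inventory/mycluster/hosts.yaml -b cluster.yml -vvvv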
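The playbook runs for over an hour and `-vvvv` produces a lot of output, so it can help to keep a copy in a log file and record the elapsed time. A minimal sketch; `run_logged` and the log path are hypothetical, and it uses a temporary file to carry the command's exit status, because a plain pipe into `tee` would report `tee`'s status instead.

```shell
#!/bin/sh
# Hypothetical helper: run a long command, append all of its output to a log
# file while still showing it on screen, then print the elapsed time.
# A temporary file carries the command's exit status out of the pipeline.
run_logged() {
  log=$1
  shift
  status_file=$(mktemp)
  start=$(date +%s)
  {
    rc_inner=0
    "$@" 2>&1 || rc_inner=$?
    echo "$rc_inner" > "$status_file"
  } | tee -a "$log"
  rc=$(cat "$status_file")
  rm -f "$status_file"
  echo "exit=$rc elapsed=$(( $(date +%s) - start ))s log=$log"
  return "$rc"
}

# Example (node-1, inside kubespray-2.15.0/; the log path is an assumption):
#   run_logged /root/kubespray-install.log \
#     ansible-playbook -i inventory/mycluster/hosts.yaml -b cluster.yml -vvvv
```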

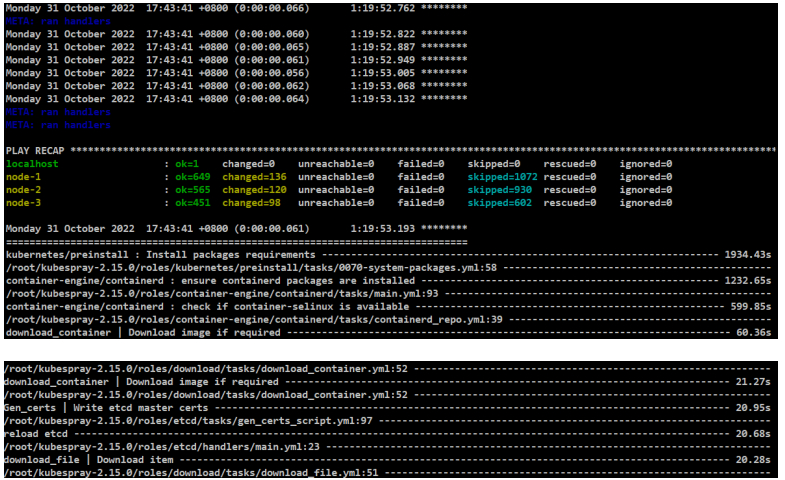

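My successful installation output (it takes a long time: about 1 hour 19 minutes in the screenshot; network speed is the deciding factor)

The process is slow because it downloads container images and various binaries, and when there are many nodes the downloads are repeated on every node, which makes it take a very long time.

#After the one-click installation, /etc/hosts on each node may look different. That is expected.

[root@node-X ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain
172.16.1.21 node-1
172.16.1.22 node-2
172.16.1.23 node-3
172.16.1.100 kxsw-1
# Ansible inventory hosts BEGIN
172.16.1.21 node-1.cluster.local node-1
172.16.1.22 node-2.cluster.local node-2
172.16.1.23 node-3.cluster.local node-3
# Ansible inventory hosts END
::1 localhost6 localhost6.localdomain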
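To see only what Kubespray added, print the block between the two marker lines in the output above. A sketch; `kubespray_hosts_block` is a hypothetical name, and it assumes the markers appear exactly as shown here.

```shell
#!/bin/sh
# Hypothetical helper: print only the lines between the
# "# Ansible inventory hosts BEGIN" and "... END" markers (inclusive).
# The file defaults to /etc/hosts.
kubespray_hosts_block() {
  sed -n '/^# Ansible inventory hosts BEGIN/,/^# Ansible inventory hosts END/p' "${1:-/etc/hosts}"
}
```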

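Verification commands

#Once the cluster is installed, look at what it consists of: the deployment was fully automated, so we don't yet know which components it contains.

#After the one-click installation, check that the crictl command works on every node.

[root@node-X ~]# crictl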
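Running `crictl` with no arguments only prints its help text. A slightly more explicit check, sketched here with a hypothetical `check_cmds` helper, confirms that the tools are on `PATH` and reports any that are missing.

```shell
#!/bin/sh
# Hypothetical helper: check that each named command is on PATH.
# Prints one line per command and returns non-zero if any is missing.
# Example on each node: check_cmds crictl ctr
# Example on node-1 (a master): check_cmds kubectl
check_cmds() {
  missing=0
  for c in "$@"; do
    if command -v "$c" >/dev/null 2>&1; then
      echo "ok:      $c -> $(command -v "$c")"
    else
      echo "missing: $c"
      missing=1
    fi
  done
  return "$missing"
}
```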

#List the namespaces

[root@node-1 kubespray-2.15.0]# kubectl get ns
NAME              STATUS   AGE
default           Active   64m
ingress-nginx     Active   61m
kube-node-lease   Active   64m
kube-public       Active   64m
kube-system       Active   64m

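#See which resources each namespace has. Without -n, kubectl uses the current context's namespace, which is default here, so the two commands below are equivalent.

[root@node-1 kubespray-2.15.0]# kubectl get all
NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.200.0.1   <none>        443/TCP   64m

#The ClusterIP comes from the service address range configured for Kubespray (kube_service_addresses, 10.200.0.0/16 here).

[root@node-1 kubespray-2.15.0]# kubectl get all -n default
NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.200.0.1   <none>        443/TCP   65m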

#Check ingress-nginx: 3 Pods managed by 1 DaemonSet, so one ingress-nginx controller runs on every node.

[root@node-1 kubespray-2.15.0]# kubectl get all -n ingress-nginx
NAME                                 READY   STATUS    RESTARTS   AGE
pod/ingress-nginx-controller-bp25v   1/1     Running   0          73m
pod/ingress-nginx-controller-wndln   1/1     Running   0          73m
pod/ingress-nginx-controller-wrhbf   1/1     Running   0          73m

NAME                                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
daemonset.apps/ingress-nginx-controller   3         3         3       3            3           kubernetes.io/os=linux   73m
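To check this without reading the table, compare the DaemonSet's desired and ready counts. A sketch: `ds_ready` is a hypothetical helper, while `.status.desiredNumberScheduled` and `.status.numberReady` are standard DaemonSet status fields.

```shell
#!/bin/sh
# Hypothetical helper: given "DESIRED READY" as one string, succeed only when
# the DaemonSet wants at least one Pod and all of them are ready.
# Example:
#   ds_ready "$(kubectl get ds ingress-nginx-controller -n ingress-nginx \
#     -o jsonpath='{.status.desiredNumberScheduled} {.status.numberReady}')"
ds_ready() {
  set -- $1              # split "3 3" into $1=desired, $2=ready
  desired=${1:-0}
  ready=${2:-0}
  echo "desired=$desired ready=$ready"
  [ "$desired" -gt 0 ] && [ "$desired" -eq "$ready" ]
}
```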

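#Check the other namespaces and roughly what they contain. "No resources found" only covers the types included in `get all`; kube-node-lease, for example, still holds the nodes' Lease objects.

[root@node-1 kubespray-2.15.0]# kubectl get all -n kube-node-lease
No resources found in kube-node-lease namespace.

[root@node-1 kubespray-2.15.0]# kubectl get all -n kube-public
No resources found in kube-public namespace.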
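Instead of checking namespaces one by one, a loop can walk all of them. A minimal sketch with a hypothetical `list_all_resources` function. Keep in mind that `get all` only covers a fixed set of common types (Pods, Services, Deployments, ReplicaSets, DaemonSets, StatefulSets, Jobs and so on), so objects such as ConfigMaps, Secrets, or Leases are not listed. `kubectl get all --all-namespaces` gives a single combined listing if you prefer that.

```shell
#!/bin/sh
# Hypothetical helper: run "kubectl get all" once per namespace,
# with a header line before each namespace's output.
list_all_resources() {
  for ns in $(kubectl get ns -o jsonpath='{.items[*].metadata.name}'); do
    echo "=== namespace: $ns ==="
    kubectl get all -n "$ns"
  done
}
```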

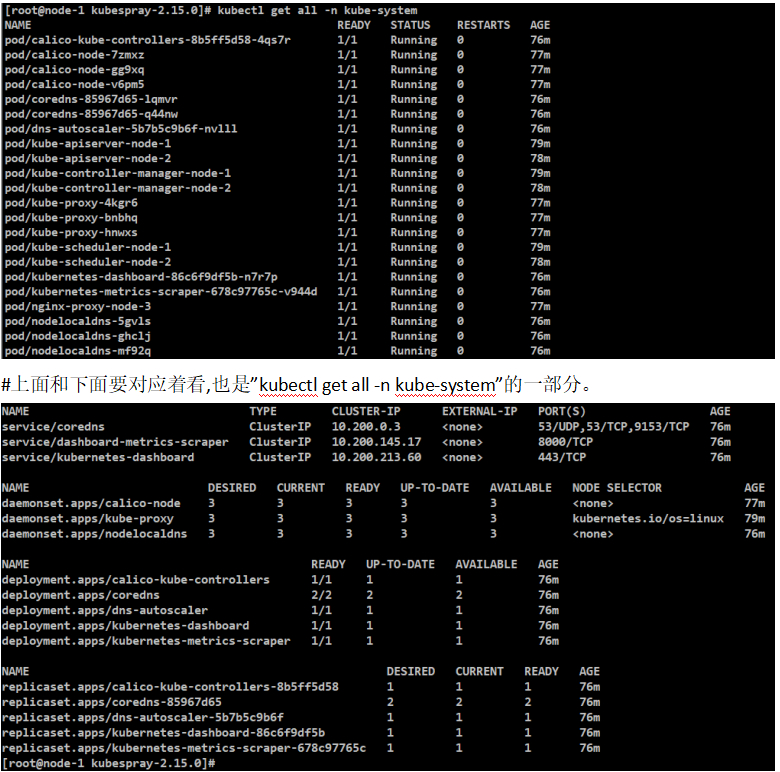

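#The kube-system output shows which components are installed on which nodes: some run on one node, some on two, and some on all three.

#For example, components shown on node-1 and node-2 run only on the master nodes; components shown on all three nodes run on both the masters and the worker.

[root@node-1 kubespray-2.15.0]# kubectl get all -n kube-system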

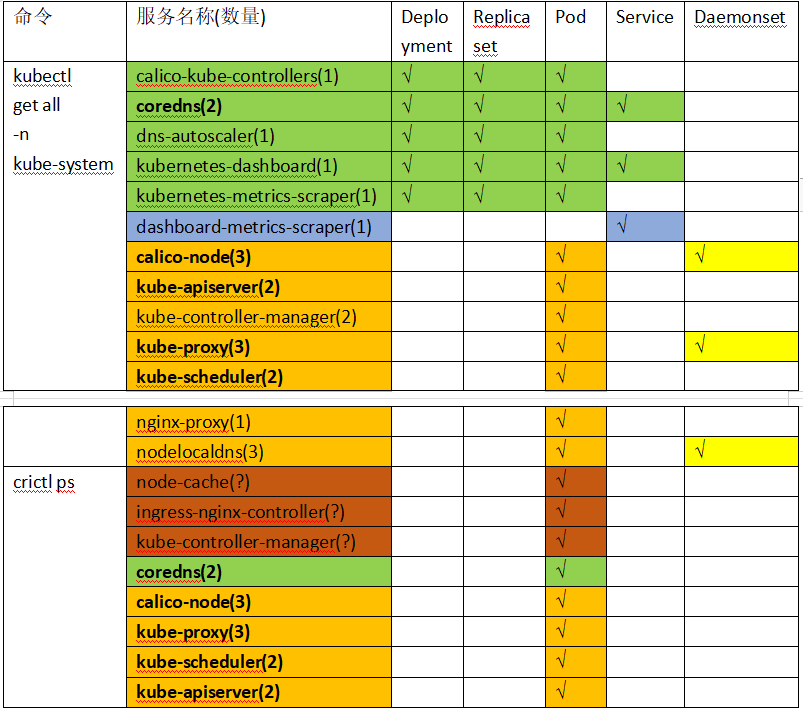
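To see how the kube-system Pods are spread across nodes as plain text rather than a screenshot, one option is to print each Pod's node with `custom-columns` and count per node. A sketch; `count_by_node` is a hypothetical helper that reads `NAME NODE` pairs on stdin.

```shell
#!/bin/sh
# Hypothetical helper: count Pods per node from "NAME NODE" lines on stdin.
# Example:
#   kubectl get pods -n kube-system --no-headers \
#     -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName | count_by_node
count_by_node() {
  awk '{ count[$2]++ } END { for (n in count) print n, count[n] }' | sort
}
```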

#The two screenshots map to each other as follows.

#The same color marks the same object in the output of both commands.

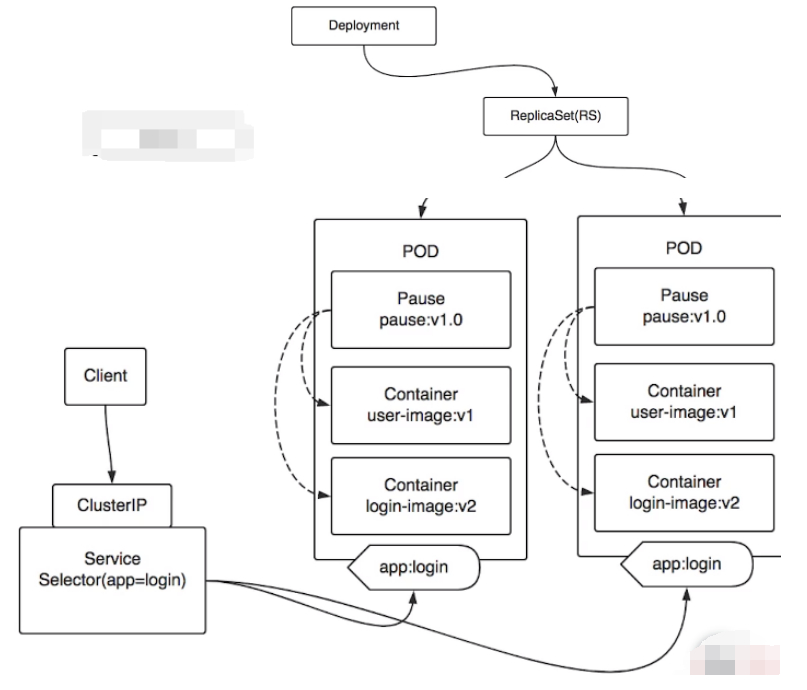

#If you have forgotten what these objects are, review the diagram in section 2-2.

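Example: nginx-proxy

#For example, nginx-proxy-node-3 is a Pod that does not belong to any Service, Deployment, or ReplicaSet. It is a static Pod: the kubelet on node-3 starts it directly from a manifest file in /etc/kubernetes/manifests, and the API server shows it as a mirror Pod named after the Pod plus the node. Look at that manifest on node-3 (the worker node):

[root@node-3 ~]# cat /etc/kubernetes/manifests/nginx-proxy.yml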

apiVersion: v1
kind: Pod
metadata:
  name: nginx-proxy
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    k8s-app: kube-nginx
......
spec:
  containers:
  # The image is nginx
  - name: nginx-proxy
    image: docker.io/library/nginx:1.19
    imagePullPolicy: IfNotPresent
    resources:
      requests:
        cpu: 25m
        memory: 32M
  ......
  # Volumes: /etc/nginx on the host is mounted, so that is where the config file lives
  volumes:
  - name: etc-nginx
    hostPath:
      path: /etc/nginx

#View the mounted config file

[root@node-3 ~]# cat /etc/nginx/nginx.conf
stream {
  # The two master node addresses. nginx on this node proxies local port 6443
  # to both API servers, which makes the API server highly available.
  upstream kube_apiserver {
    # least_conn: send each new connection to the backend with the fewest active connections
    least_conn;
    server 172.16.1.21:6443;
    server 172.16.1.22:6443;
  }

  server {
    listen 127.0.0.1:6443;
    proxy_pass kube_apiserver;
    proxy_timeout 10m;
    proxy_connect_timeout 1s;
  }
}

http {
  ......
  server {
    listen 8081;
    location /healthz {
      access_log off;
      return 200;
    }
    location /stub_status {
      stub_status on;
      access_log off;
    }
  }
}

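#In other business clusters, the API server is often made highly available with Keepalived: the worker nodes reach the API server through a virtual IP. (That virtual IP plays the role that the local nginx plays here.)

This setup is different: every node runs its own proxy on 6443 that load-balances across the real API server addresses, which provides both high availability and load balancing.

#In this cluster only node-3 is a pure worker node. The other two nodes are masters that already run an API server on port 6443, so nginx-proxy is not started on them.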
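On node-3 you can read the backend list out of nginx.conf and probe each backend as well as the local health endpoint on port 8081. A sketch; `apiserver_backends` is hypothetical and assumes the `upstream kube_apiserver` block is formatted as above. For the API servers, any HTTP status (including 401 or 403 if anonymous requests are rejected) shows that the backend is reachable, while `000` means curl got no response.

```shell
#!/bin/sh
# Hypothetical helper: print the "server" addresses inside the
# "upstream kube_apiserver { ... }" block of an nginx config file.
# Example on node-3:
#   for b in $(apiserver_backends); do
#     curl -k -s -o /dev/null -w "$b %{http_code}\n" "https://$b/healthz"
#   done
#   curl -s -o /dev/null -w 'nginx-proxy %{http_code}\n' http://127.0.0.1:8081/healthz
apiserver_backends() {
  awk '
    /^[ \t]*upstream[ \t]+kube_apiserver/ { inblock = 1; next }
    inblock && index($0, "}") { inblock = 0 }
    inblock && $1 == "server" { sub(/;$/, "", $2); print $2 }
  ' "${1:-/etc/nginx/nginx.conf}"
}
```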

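Manually pull images (optional)

#Note: once containerd has been installed during the cluster installation and the containerd service is running normally, you can pull these images by hand. Some image pulls may stall for no obvious reason and make the installation take extremely long; in that case, export the image on a node that has it and import it on the other nodes.

#This image in particular may hang indefinitely. Export it on another machine that can pull it, then import it here:

#registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/ingress-nginx_controller:v0.41.2

#gluster-01 could not pull this image.

#Export and import the image manually.

#ctr -n k8s.io and crictl see the same images (crictl works on containerd's k8s.io namespace).

#Do not use ":" in the archive file name; otherwise SFTP, SCP, and similar remote tools may misread the part before the colon as a hostname.

#For example: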

[root@gluster-02 ~]# ctr -n k8s.io i export ingress-nginx_controller-v0.41.2.tar.gz registry.cn-hangzhou.aliyuncs.com/kubernetes-ku

[root@gluster-01 ~]# ctr -n k8s.io i import ingress-nginx_controller-v0.41.2.tar.gz
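A sketch of the naming rule above plus the export, copy, and import round trip. `img_to_tar`, the `.tar` suffix, and the `scp` destination are assumptions for illustration; the `ctr` subcommands are the same ones used above.

```shell
#!/bin/sh
# Hypothetical helper: derive a colon-free archive name from an image reference:
#   registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/ingress-nginx_controller:v0.41.2
#   -> ingress-nginx_controller-v0.41.2.tar
img_to_tar() {
  name=${1##*/}                                               # drop registry/repository path
  printf '%s.tar\n' "$(printf '%s' "$name" | tr ':' '-')"     # replace ":" with "-"
}

# Hypothetical round trip (host names and paths are placeholders):
#   img=registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/ingress-nginx_controller:v0.41.2
#   tar=$(img_to_tar "$img")
#   ctr -n k8s.io i export "$tar" "$img"     # on gluster-02, which has the image
#   scp "$tar" gluster-01:/root/
#   ctr -n k8s.io i import "/root/$tar"      # on gluster-01
```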

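#Manually pull the images (optional), re-tagging each one with the upstream name (k8s.gcr.io, docker.io, quay.io) that Kubespray expects.

#For the kubespray-v2.15.0-images.sh script, see the copy on local disk.

#Important: only do this after the "one-click installation" above has run and containerd has been installed successfully. It also serves as a second check.

#Note: this is run from the home directory (~). I did run this step.

[root@node-X ~]# curl https://gitee.com/pa/pub-doc/raw/master/kubespray-v2.15.0-images.sh | bash -x

The content of kubespray-v2.15.0-images.sh is as follows: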

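#!/bin/bash

# Pull each image from the Aliyun mirror (stop on the first failed pull), re-tag it with the upstream name, then delete the mirror tags.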

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_metrics-scraper:v1.0.6 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_metrics-scraper:v1.0.6 docker.io/kubernetesui/metrics-scraper:v1.0.6

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/library_nginx:1.19 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/library_nginx:1.19 docker.io/library/nginx:1.19

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/coredns:1.7.0 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/coredns:1.7.0 k8s.gcr.io/coredns:1.7.0

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/dns_k8s-dns-node-cache:1.16.0 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/dns_k8s-dns-node-cache:1.16.0 k8s.gcr.io/dns/k8s-dns-node-cache:1.16.0

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/ingress-nginx_controller:v0.41.2 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/ingress-nginx_controller:v0.41.2 k8s.gcr.io/ingress-nginx/controller:v0.41.2

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-apiserver:v1.19.7 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-apiserver:v1.19.7 k8s.gcr.io/kube-apiserver:v1.19.7

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-controller-manager:v1.19.7 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-controller-manager:v1.19.7 k8s.gcr.io/kube-controller-manager:v1.19.7

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-proxy:v1.19.7 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-proxy:v1.19.7 k8s.gcr.io/kube-proxy:v1.19.7

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-scheduler:v1.19.7 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-scheduler:v1.19.7 k8s.gcr.io/kube-scheduler:v1.19.7

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.2 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.2 k8s.gcr.io/pause:3.2

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.3 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.3 k8s.gcr.io/pause:3.3

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_dashboard-amd64:v2.1.0 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_dashboard-amd64:v2.1.0 docker.io/kubernetesui/dashboard-amd64:v2.1.0

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/cpa_cluster-proportional-autoscaler-amd64:1.8.3 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/cpa_cluster-proportional-autoscaler-amd64:1.8.3 k8s.gcr.io/cpa/cluster-proportional-autoscaler-amd64:1.8.3

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_cni:v3.16.5 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_cni:v3.16.5 quay.io/calico/cni:v3.16.5

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_kube-controllers:v3.16.5 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_kube-controllers:v3.16.5 quay.io/calico/kube-controllers:v3.16.5

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_node:v3.16.5 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_node:v3.16.5 quay.io/calico/node:v3.16.5

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_metrics-scraper:v1.0.6

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/library_nginx:1.19

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/coredns:1.7.0

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/dns_k8s-dns-node-cache:1.16.0

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/ingress-nginx_controller:v0.41.2

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-apiserver:v1.19.7

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-controller-manager:v1.19.7

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-proxy:v1.19.7

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-scheduler:v1.19.7

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.2

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.3

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_dashboard-amd64:v2.1.0

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/cpa_cluster-proportional-autoscaler-amd64:1.8.3

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_cni:v3.16.5

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_kube-controllers:v3.16.5

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_node:v3.16.5
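The script repeats the same three steps for every image. As an alternative sketch (not the author's script), the mirror-to-upstream mapping can be kept in a table and processed in a loop, with a dry-run mode that only prints the commands. `MIRROR`, `IMAGES`, `run`, `sync_images`, and `DRY_RUN` are hypothetical names, and only four of the images are listed; the rest follow the same pattern. Unlike the original, it removes each mirror tag right after tagging; the new upstream tag still references the same image content.

```shell
#!/bin/sh
# Hypothetical table-driven variant: "mirror-name upstream-reference" per line.
MIRROR=registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray
IMAGES='
library_nginx:1.19 docker.io/library/nginx:1.19
coredns:1.7.0 k8s.gcr.io/coredns:1.7.0
pause:3.2 k8s.gcr.io/pause:3.2
calico_node:v3.16.5 quay.io/calico/node:v3.16.5
'

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Pull, re-tag, and drop the mirror tag for every entry; stop on the first failed pull.
sync_images() {
  while read -r src dst; do
    [ -n "$src" ] || continue                   # skip blank lines in the table
    run crictl pull "$MIRROR/$src" || return 1
    run ctr -n k8s.io i tag "$MIRROR/$src" "$dst"
    run ctr -n k8s.io i rm "$MIRROR/$src"
  done <<EOF
$IMAGES
EOF
}
```

Try `DRY_RUN=1 sync_images` first to review the commands, then run `sync_images` on each node.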

Title: Kubernetes (4) The kubespray method (4.3): Deploying a production-grade k8s cluster in one step with kubespray

Author: yazong

URL: https://blog.llyweb.com/articles/2022/11/01/1667236499846.html