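# The control plane is the set of components that run on the Kubernetes master nodes. To keep each component highly available, every one of them is deployed as at least two instances.

# In this example we deploy the API Server, Scheduler, and Controller Manager on two nodes.

# You can also follow this tutorial to deploy a three-node highly available setup; the steps are the same.

# All commands below are run on every master node, which in this example means node-1 and node-2.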

Component: configuring the API Server

# Working directory on each node

[root@node-1 ~]# pwd

/root

[root@node-1/2 ~]# cd ~

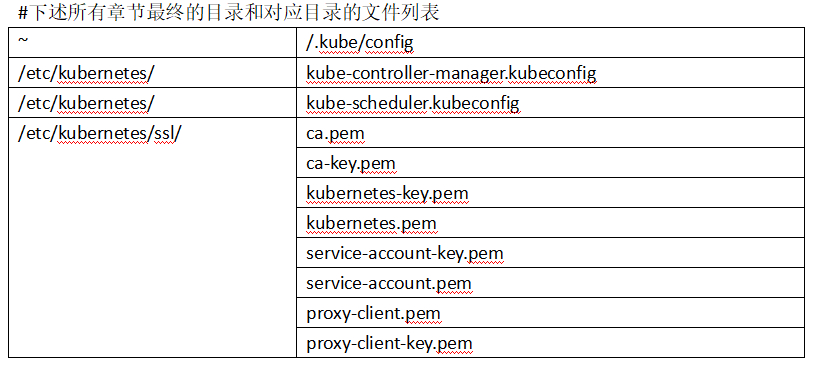

# Create the directory Kubernetes needs for storing its certificates

[root@node-1/2 ~]# mkdir -p /etc/kubernetes/ssl

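# Prepare the certificate files: copy every certificate Kubernetes needs into this directory

# (cp is used here instead of mv, so the originals stay in the home directory)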

[root@node-1/2 ~]# cp ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem \

service-account-key.pem service-account.pem \

proxy-client.pem proxy-client-key.pem \

/etc/kubernetes/ssl

[root@node-1/2 ~]# ll /etc/kubernetes/ssl

total 32

-rw------- 1 root root 1679 Nov 4 22:14 ca-key.pem

-rw-r--r-- 1 root root 1367 Nov 4 22:14 ca.pem

-rw------- 1 root root 1679 Nov 4 22:14 kubernetes-key.pem

-rw-r--r-- 1 root root 1647 Nov 4 22:14 kubernetes.pem

-rw------- 1 root root 1675 Nov 4 22:14 proxy-client-key.pem

-rw-r--r-- 1 root root 1399 Nov 4 22:14 proxy-client.pem

-rw------- 1 root root 1675 Nov 4 22:14 service-account-key.pem

-rw-r--r-- 1 root root 1407 Nov 4 22:14 service-account.pem
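
Before moving on, it can help to confirm that all eight files listed above actually landed in the directory, since a missing certificate only shows up later as a failed service start. The following is a minimal sketch; `check_certs` is a hypothetical helper name, not part of Kubernetes, and it only checks that each file exists and is non-empty.

```shell
# Sketch: verify that every certificate file copied above is present.
# check_certs is a hypothetical helper introduced for illustration.
check_certs() {
  local dir="${1:-/etc/kubernetes/ssl}"
  local missing=0
  local f
  for f in ca.pem ca-key.pem kubernetes.pem kubernetes-key.pem \
           service-account.pem service-account-key.pem \
           proxy-client.pem proxy-client-key.pem; do
    # -s is true only when the file exists and is not empty
    if [ ! -s "${dir}/${f}" ]; then
      echo "missing or empty: ${dir}/${f}" >&2
      missing=1
    fi
  done
  return "${missing}"
}
```

Usage: `check_certs /etc/kubernetes/ssl && echo "all certificates present"`.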

# Configure kube-apiserver.service

# On each node, set IP to that node's own internal IP address

[root@node-1 ~]# IP=172.16.1.21

[root@node-1 ~]# echo $IP

172.16.1.21

[root@node-2 ~]# IP=172.16.1.22

[root@node-2 ~]# echo $IP

172.16.1.22

# Number of apiserver instances

[root@node-1/2 ~]# APISERVER_COUNT=2

# List of etcd nodes

[root@node-1/2 ~]# ETCD_ENDPOINTS=(172.16.1.21 172.16.1.22 172.16.1.23)
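
The unit file in this tutorial indexes the array by hand (`${ETCD_ENDPOINTS[0]}`, `[1]`, `[2]`), which silently produces an empty host if the list has fewer than three entries. As an alternative sketch, the comma-separated value for `--etcd-servers` could be built from however many IPs are given. `etcd_servers_flag` is a hypothetical helper; the `https` scheme and port 2379 are taken from the unit file in this tutorial.

```shell
# Sketch: build the --etcd-servers value from a list of etcd IPs.
# etcd_servers_flag is a hypothetical helper introduced for illustration.
etcd_servers_flag() {
  local out=""
  local ip
  for ip in "$@"; do
    # ${out:+${out},} adds a comma only when out is already non-empty
    out="${out:+${out},}https://${ip}:2379"
  done
  printf '%s\n' "${out}"
}

# Usage with the array defined earlier (bash):
#   etcd_servers_flag "${ETCD_ENDPOINTS[@]}"
```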

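# Create the apiserver service (the systemd unit file for the apiserver)

# The apiserver takes a large number of startup flags; there is no need to study them in detail for now.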

[root@node-1/2 ~]# cat <<EOF > /etc/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-apiserver \\

--advertise-address=${IP} \\

--allow-privileged=true \\

--apiserver-count=${APISERVER_COUNT} \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/var/log/audit.log \\

--authorization-mode=Node,RBAC \\

--bind-address=0.0.0.0 \\

--client-ca-file=/etc/kubernetes/ssl/ca.pem \\

--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\

--etcd-cafile=/etc/kubernetes/ssl/ca.pem \\

--etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem \\

--etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem \\

--etcd-servers=https://${ETCD_ENDPOINTS[0]}:2379,https://${ETCD_ENDPOINTS[1]}:2379,https://${ETCD_ENDPOINTS[2]}:2379 \\

--event-ttl=1h \\

--kubelet-certificate-authority=/etc/kubernetes/ssl/ca.pem \\

--kubelet-client-certificate=/etc/kubernetes/ssl/kubernetes.pem \\

--kubelet-client-key=/etc/kubernetes/ssl/kubernetes-key.pem \\

--service-account-issuer=api \\

--service-account-key-file=/etc/kubernetes/ssl/service-account.pem \\

--service-account-signing-key-file=/etc/kubernetes/ssl/service-account-key.pem \\

--api-audiences=api,vault,factors \\

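# (Annotation for the reader, not part of the unit file; leave it out when pasting.) Virtual IP range for Services in the cluster. Any range that does not conflict with the internal network works. Keep it consistent with Chapter 4. The Service and Pod ranges are normally kept separate.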

--service-cluster-ip-range=10.233.0.0/16 \\

--service-node-port-range=30000-32767 \\

--proxy-client-cert-file=/etc/kubernetes/ssl/proxy-client.pem \\

--proxy-client-key-file=/etc/kubernetes/ssl/proxy-client-key.pem \\

--runtime-config=api/all=true \\

--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \\

--requestheader-allowed-names=aggregator \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-username-headers=X-Remote-User \\

--tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \\

--tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \\

--v=1

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

[root@node-1/2 ~]# ll /etc/systemd/system/kube-apiserver.service

-rw-r--r-- 1 root root 2006 Nov 4 22:33 /etc/systemd/system/kube-apiserver.service

[root@node-1/2 ~]# cat /etc/systemd/system/kube-apiserver.service
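
Because the heredoc above is unquoted (`<<EOF`), the shell substitutes `${IP}`, `${APISERVER_COUNT}`, and `${ETCD_ENDPOINTS[...]}` at the moment the file is written, and each `\\` becomes a single `\`. If one of those variables was not set in the current shell, the file ends up with a flag that has no value. A rough way to spot that, assuming a hypothetical helper named `empty_flags`:

```shell
# Sketch: list any "--flag=" in a unit file that ended up without a value,
# which usually means a shell variable was unset when the heredoc ran.
# empty_flags is a hypothetical helper introduced for illustration.
empty_flags() {
  # -n prints line numbers; the "--" stops grep from parsing the pattern as an option
  grep -En -- '--[a-z-]+=([[:space:]]|$)' "$1"
}
```

Usage: `empty_flags /etc/systemd/system/kube-apiserver.service` prints nothing (and returns non-zero) when every flag has a value. It does not catch an empty host inside `--etcd-servers`, which would show up as `https://:2379`.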

Component: configuring kube-controller-manager

[root@node-1/2 ~]# pwd

/root

[root@node-1/2 ~]# mkdir -p /etc/kubernetes/

# Prepare the kubeconfig file

[root@node-1/2 ~]# ll kube-controller-manager.kubeconfig

-rw------- 1 root root 6401 Jan 3 16:16 kube-controller-manager.kubeconfig

[root@node-1/2 ~]# cp kube-controller-manager.kubeconfig /etc/kubernetes/

[root@node-1/2 ~]# ll /etc/kubernetes/kube-controller-manager.kubeconfig

-rw------- 1 root root 6401 Nov 4 22:42 /etc/kubernetes/kube-controller-manager.kubeconfig

# Create kube-controller-manager.service

[root@node-1/2 ~]# cat <<EOF > /etc/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \\

--bind-address=0.0.0.0 \\

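# (Annotation for the reader, not part of the unit file; leave it out when pasting.) Virtual IP range (CIDR) for Pods in the cluster. Any range that does not conflict with the internal network works. Keep it consistent with Chapter 4. The Service and Pod ranges are normally kept separate.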

--cluster-cidr=10.200.0.0/16 \\

--cluster-name=kubernetes \\

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\

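# (Annotation for the reader, not part of the unit file; leave it out when pasting.) kube-controller-manager issues certificates for the cluster. The flag below gives the certificates it signs a very long validity: 876000h is roughly 100 years, so in practice they do not expire.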

--cluster-signing-duration=876000h0m0s \\

--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\

--leader-elect=true \\

--root-ca-file=/etc/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/etc/kubernetes/ssl/service-account-key.pem \\

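# (Annotation for the reader, not part of the unit file; leave it out when pasting.) Virtual IP range for Services in the cluster; it must match the value given to the apiserver.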

--service-cluster-ip-range=10.233.0.0/16 \\

--use-service-account-credentials=true \\

--v=1

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

[root@node-1/2 ~]# ll /etc/systemd/system/kube-controller-manager.service

-rw-r--r-- 1 root root 807 Nov 4 22:43 /etc/systemd/system/kube-controller-manager.service

[root@node-1/2 ~]# cat /etc/systemd/system/kube-controller-manager.service
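
Both unit files carry `--service-cluster-ip-range`, and the two values are meant to agree. A small sketch for comparing them follows; `flag_value` and `same_flag` are hypothetical helper names, and the parsing assumes one flag per line, as in the unit files written above.

```shell
# Sketch: read a flag's value out of a unit file and compare two files.
# flag_value and same_flag are hypothetical helpers introduced for illustration.
flag_value() {
  # $1 = unit file, $2 = flag name without the leading dashes
  sed -n "s/^[[:space:]]*--$2=\([^[:space:]]*\).*/\1/p" "$1" | head -n 1
}

same_flag() {
  # $1 = flag name, $2 and $3 = the two unit files to compare
  local a b
  a=$(flag_value "$2" "$1")
  b=$(flag_value "$3" "$1")
  # Succeeds only when the flag is present and identical in both files
  [ -n "${a}" ] && [ "${a}" = "${b}" ]
}
```

Usage: `same_flag service-cluster-ip-range /etc/systemd/system/kube-apiserver.service /etc/systemd/system/kube-controller-manager.service || echo "ranges differ"`.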

Component: configuring kube-scheduler

[root@node-1/2 ~]# pwd

/root

# Prepare the kubeconfig file

[root@node-1/2 ~]# ll kube-scheduler.kubeconfig

-rw------- 1 root root 6347 Nov 3 21:20 kube-scheduler.kubeconfig

[root@node-1/2 ~]# cp kube-scheduler.kubeconfig /etc/kubernetes

[root@node-1/2 ~]# ll /etc/kubernetes/kube-scheduler.kubeconfig

-rw------- 1 root root 6347 Nov 4 22:48 /etc/kubernetes/kube-scheduler.kubeconfig

# Create the scheduler service file

[root@node-1/2 ~]# cat <<EOF > /etc/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-scheduler \\

--authentication-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\

--authorization-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\

--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\

--leader-elect=true \\

--bind-address=0.0.0.0 \\

--port=0 \\

--v=1

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

[root@node-1/2 ~]# ll /etc/systemd/system/kube-scheduler.service

-rw-r--r-- 1 root root 496 Nov 4 22:49 /etc/systemd/system/kube-scheduler.service

[root@node-1/2 ~]# cat /etc/systemd/system/kube-scheduler.service

Starting the services

[root@node-1/2 ~]# systemctl daemon-reload

[root@node-1/2 ~]# systemctl enable kube-apiserver

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /etc/systemd/system/kube-apiserver.service.

[root@node-1/2 ~]# systemctl enable kube-controller-manager

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /etc/systemd/system/kube-controller-manager.service.

[root@node-1/2 ~]# systemctl enable kube-scheduler

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /etc/systemd/system/kube-scheduler.service.

[root@node-1/2 ~]# systemctl restart kube-apiserver

[root@node-1/2 ~]# systemctl restart kube-controller-manager

[root@node-1/2 ~]# systemctl restart kube-scheduler

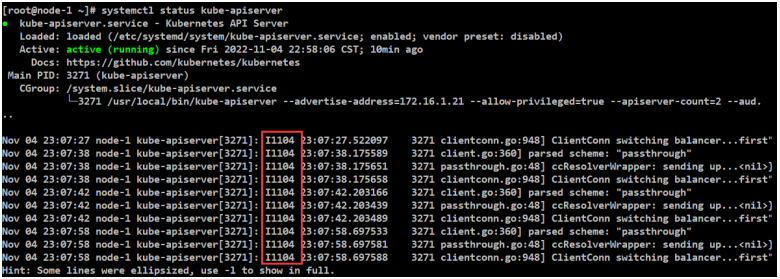

[root@node-1/2 ~]# systemctl status kube-apiserver

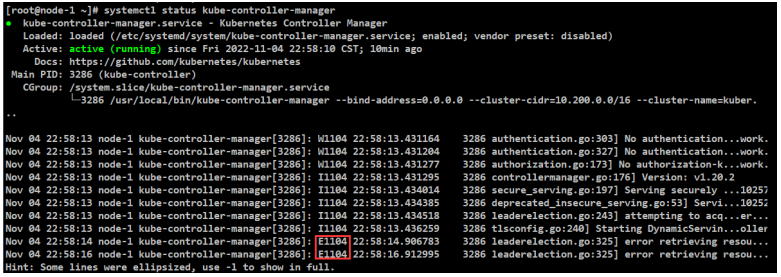

[root@node-1/2 ~]# systemctl status kube-controller-manager

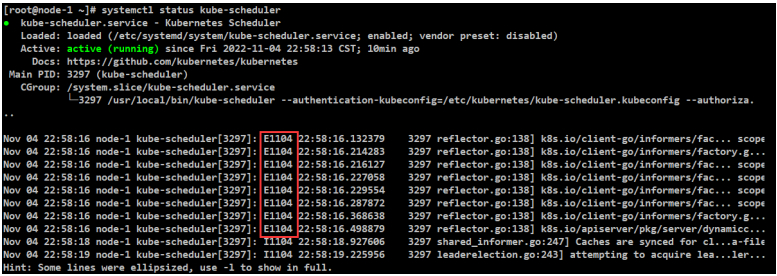

[root@node-1/2 ~]# systemctl status kube-scheduler

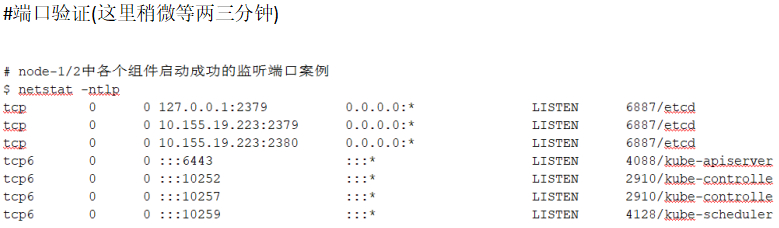

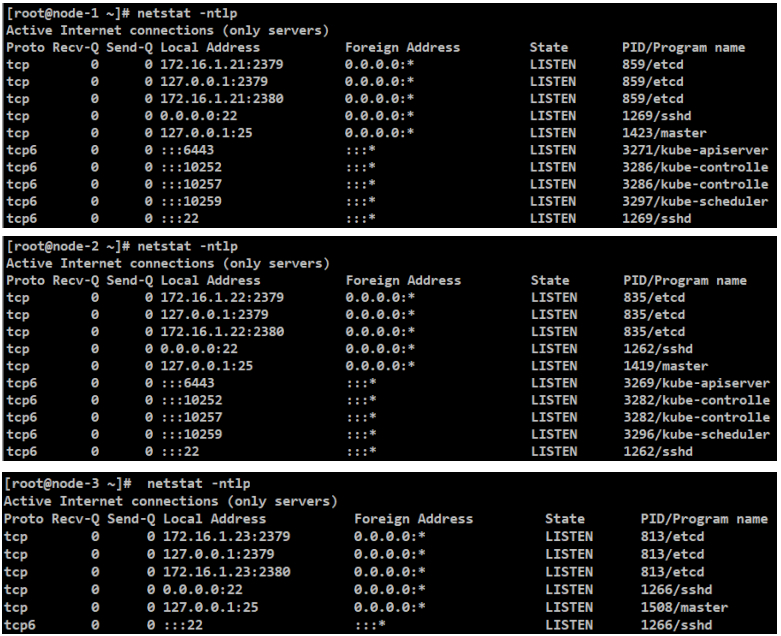
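
Instead of reading three `systemctl status` screens, the same check can be scripted. This is a minimal sketch that relies on `systemctl is-active --quiet`; `check_control_plane` is a hypothetical helper name.

```shell
# Sketch: report whether each control plane service is active.
# check_control_plane is a hypothetical helper introduced for illustration.
check_control_plane() {
  local rc=0
  local svc
  for svc in kube-apiserver kube-controller-manager kube-scheduler; do
    # is-active --quiet prints nothing and signals the state via exit status
    if systemctl is-active --quiet "${svc}"; then
      echo "${svc}: active"
    else
      echo "${svc}: NOT active"
      rc=1
    fi
  done
  return "${rc}"
}
```

Usage: run `check_control_plane` on each master node; it returns non-zero if any of the three services is not running.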

Service verification

[root@node-1/2/3 ~]# netstat -ntlp

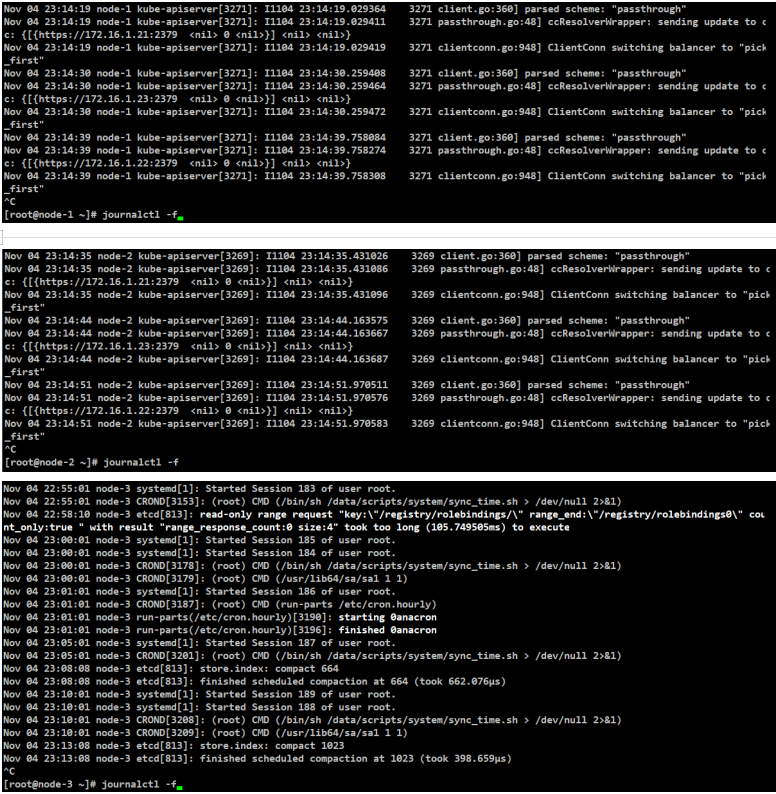
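
To avoid scanning the listing by eye, the expected ports can be checked with a filter. This sketch assumes the default secure ports (6443 for kube-apiserver, 10257 for kube-controller-manager, 10259 for kube-scheduler), since the unit files above do not override them; `missing_ports` is a hypothetical helper name.

```shell
# Sketch: read a netstat/ss listing on stdin and print the expected
# control plane ports that are NOT found in it.
# missing_ports is a hypothetical helper; the port list assumes defaults.
missing_ports() {
  local listing port
  listing=$(cat)
  for port in 6443 10257 10259; do
    # Match ":PORT" followed by whitespace, as in ":::6443 " or "0.0.0.0:6443 "
    printf '%s\n' "${listing}" | grep -Eq "[:.]${port}[[:space:]]" || echo "${port}"
  done
}

# Usage:
#   netstat -ntl | missing_ports
```

An empty result means all three ports are listening on that node.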

Log verification

# Check the system log for error messages from the components

[root@node-1/2/3 ~]# journalctl -f
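
`journalctl -f` follows every unit at once. As a narrower sketch, the log of each component can be read on its own and filtered for error lines. The filter assumes the usual Kubernetes log line header, where error and fatal lines start with `E` or `F` followed by the month and day (for example `E1104 ...`); `component_errors` is a hypothetical helper name.

```shell
# Sketch: show recent error/fatal log lines for each control plane unit.
# component_errors is a hypothetical helper; the E/F prefix filter is an
# assumption about the components' log line format.
component_errors() {
  local svc
  for svc in kube-apiserver kube-controller-manager kube-scheduler; do
    echo "== ${svc} =="
    # -o cat prints only the message text, without the journal prefix
    journalctl -u "${svc}" --since "10 minutes ago" --no-pager -o cat \
      | grep -E '^[EF][0-9]{4} ' || true
  done
}
```

Usage: run `component_errors` shortly after starting the services; sections with no lines under them had no matching errors in the last ten minutes.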

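Component: configuring kubectl

# kubectl is the client tool for managing a Kubernetes cluster. It was downloaded to all master nodes earlier; the steps below configure it so it can be used.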

[root@node-1/2 ~]# cd ~

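# Create the kubectl configuration directory (kubectl reads its configuration from this directory by default; it talks to the API Server, so it needs a configuration file as well)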

[root@node-1/2 ~]# mkdir ~/.kube/

[root@node-1/2 ~]# ll -ld ~/.kube/

drwxr-xr-x 2 root root 4096 Nov 4 23:44 /root/.kube/

# Copy the previously generated administrator file admin.kubeconfig to kubectl's default configuration path

[root@node-1/2 ~]# ll ~/admin.kubeconfig

-rw------- 1 root root 6275 Jan 3 16:16 /root/admin.kubeconfig

[root@node-1/2 ~]# cp ~/admin.kubeconfig ~/.kube/config

[root@node-1/2 ~]# ll ~/.kube/config

-rw------- 1 root root 6275 Nov 4 23:45 /root/.kube/config

# Test (~/.kube/config can be set up on node-1 or node-2; whichever node has the file can run kubectl get nodes and manage the cluster.)

[root@node-1/2 ~]# kubectl get nodes

# The command runs, which means kubectl completed a round trip with the API Server and is ready to use. No worker nodes have joined yet, so none are listed.

No resources found

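# Next, create a clusterrolebinding to bind a role.

# Commands such as kubectl exec, run, and logs cause the apiserver to forward the request to the kubelet.

# So an RBAC rule is defined here that authorizes the apiserver to call the kubelet API.

# The cluster role, --clusterrole=system:kubelet-api-admin, is predefined by the system.

# The user, --user kubernetes, is the name used in the certificate configured earlier.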

[root@node-1 ~]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

# This only needs to be created from one node; running the same command again from another node fails.

clusterrolebinding.rbac.authorization.k8s.io/kube-apiserver:kubelet-apis created

[root@node-2 ~]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

error: failed to create clusterrolebinding: clusterrolebindings.rbac.authorization.k8s.io "kube-apiserver:kubelet-apis" already exists
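
The "already exists" error above is harmless, but a script that runs on every master node would stop on it. One way around that, sketched here with a hypothetical helper named `ensure_kubelet_api_binding`, is to create the binding only when `kubectl get` cannot find it.

```shell
# Sketch: create the clusterrolebinding only if it is not there yet,
# so the same script can run on every master node.
# ensure_kubelet_api_binding is a hypothetical helper introduced for illustration.
ensure_kubelet_api_binding() {
  local name="kube-apiserver:kubelet-apis"
  if kubectl get clusterrolebinding "${name}" >/dev/null 2>&1; then
    echo "${name} already exists, nothing to do"
  else
    kubectl create clusterrolebinding "${name}" \
      --clusterrole=system:kubelet-api-admin --user kubernetes
  fi
}
```

Usage: call `ensure_kubelet_api_binding` on node-1 and node-2; the second call reports that the binding exists instead of failing.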

[root@node-1/2 ~]# kubectl get nodes

No resources found

[root@node-1/2 ~]# kubectl get all -n default

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.233.0.1 <none> 443/TCP 49m

[root@node-1/2 ~]# kubectl get all -n kube-system

No resources found in kube-system namespace.
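
As one more check, the apiserver's own readiness endpoint can be queried through kubectl. This is a sketch that assumes the apiserver version in use serves `/readyz`; `apiserver_ready` is a hypothetical helper name.

```shell
# Sketch: ask the apiserver whether it considers itself ready.
# apiserver_ready is a hypothetical helper; it assumes the /readyz
# endpoint is served by the apiserver version in use.
apiserver_ready() {
  # kubectl get --raw sends a plain GET to the given API path
  [ "$(kubectl get --raw='/readyz' 2>/dev/null)" = "ok" ]
}
```

Usage: `apiserver_ready && echo "apiserver is ready"`. Running `kubectl get --raw='/readyz?verbose'` by hand lists the individual checks.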

Title: Kubernetes (5): the kubernetes-the-hard-way approach (5.5): deploying the Kubernetes control plane

Author: yazong

URL: https://blog.llyweb.com/articles/2022/11/04/1667577376395.html