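How do you actually use Ingress in production?

Is a Deployment really the right way to manage the ingress-controller? Is it convenient when the set of nodes changes?

If a service exposes TCP rather than HTTP, how do you do service discovery for it?

How do you apply custom nginx settings such as timeouts and buffer sizes?

For HTTPS services, how do you configure certificates?

For access control on web services: how do you keep sessions sticky? Can you shift a small share of traffic? How do you run A/B tests?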

[root@node-1 deep-in-kubernetes]# mkdir 8-ingress

[root@node-1 deep-in-kubernetes]# cd 8-ingress

[root@node-1 8-ingress]#

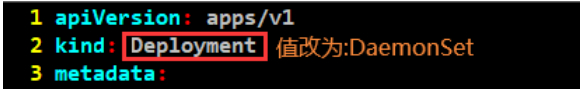

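Question 1: Run the ingress-controller as a DaemonSet

# First settle the deployment model: the controller previously ran as a Deployment; switch it to a DaemonSet.

# A DaemonSet suits this workload because you no longer manage the instance count: label a node and the controller starts there automatically. With a Deployment, every time nodes are added or removed you have to adjust replicas, which works but is clumsy.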

[root@node-1 8-ingress]# kubectl get deploy -n ingress-nginx

NAME READY UP-TO-DATE AVAILABLE AGE

default-http-backend 1/1 1 1 13d

nginx-ingress-controller 1/1 1 1 13d

[root@node-1 8-ingress]# kubectl get deploy -n ingress-nginx nginx-ingress-controller -o yaml

apiVersion: apps/v1

# kind is Deployment

kind: Deployment

......

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

............

manager: kube-controller-manager

............

name: nginx-ingress-controller

namespace: ingress-nginx

......

name: nginx-ingress-controller

ports:

- containerPort: 80

hostPort: 80

name: http

protocol: TCP

- containerPort: 443

hostPort: 443

name: https

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

......

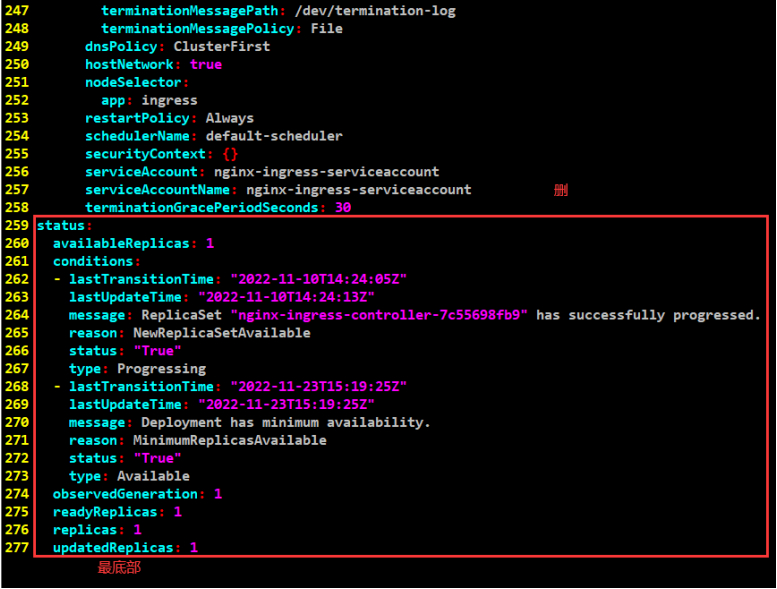

dnsPolicy: ClusterFirst

# hostNetwork mode: each node can run only one instance.

hostNetwork: true

nodeSelector:

# the nodeSelector is app=ingress

app: ingress

restartPolicy: Always

......

status:

availableReplicas: 1

......

observedGeneration: 1

readyReplicas: 1

replicas: 1

updatedReplicas: 1

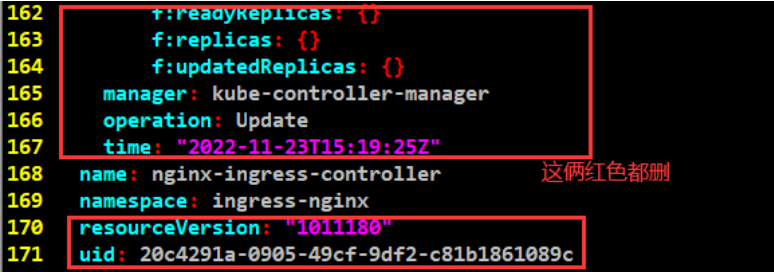

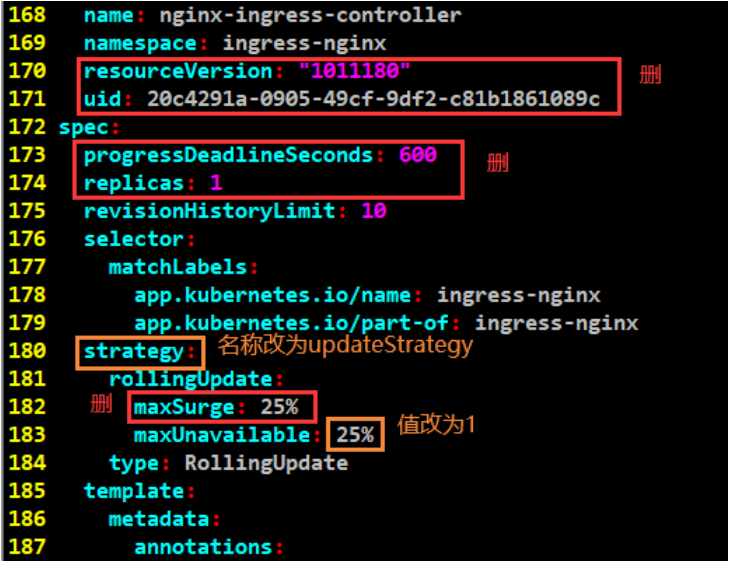

# Mark the lines below first and delete them all at the end; otherwise the line numbers shift.

# Export nginx-ingress-controller.yaml

[root@node-1 8-ingress]# kubectl get deploy -n ingress-nginx nginx-ingress-controller -o yaml > nginx-ingress-controller.yaml

[root@node-1 8-ingress]# cp nginx-ingress-controller.yaml nginx-ingress-controller-modify.yaml

[root@node-1 8-ingress]# vim nginx-ingress-controller-modify.yaml

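The middle part is not shown in screenshots; delete lines 29 through 167 in one go.

# The "maxUnavailable: 25%" parameter below: stop one pod, update one pod. The surge value has no meaning here, because a DaemonSet runs one pod per selected node and has no extra node to surge onto.

# The "replicas: 1" parameter below: a DaemonSet does not support this field.

# The "progressDeadlineSeconds: 600" parameter below: when a Deployment rolls out a ReplicaSet and the rollout stalls for longer than this many seconds, an error is recorded. A DaemonSet does not support this field.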
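The field changes described above can be sketched in Python on a manifest loaded as a plain dict. This is only an illustration of the manual vim edit: the function name `deployment_to_daemonset` and the trimmed `example` manifest are made up for the sketch, and dropping `maxSurge` simply follows the note above.

```python
import copy

# Deployment-only spec fields named in the notes above.
DEPLOYMENT_ONLY_SPEC_FIELDS = ("replicas", "progressDeadlineSeconds")


def deployment_to_daemonset(deployment: dict) -> dict:
    """Return a DaemonSet manifest derived from a Deployment manifest."""
    ds = copy.deepcopy(deployment)
    ds["kind"] = "DaemonSet"
    ds.pop("status", None)  # status is generated by the server, not applied

    spec = ds["spec"]
    for field in DEPLOYMENT_ONLY_SPEC_FIELDS:
        spec.pop(field, None)

    # A Deployment uses `strategy`; a DaemonSet uses `updateStrategy`.
    strategy = spec.pop("strategy", None)
    if strategy is not None:
        rolling = dict(strategy.get("rollingUpdate", {}))
        rolling.pop("maxSurge", None)  # dropped, following the note above
        rolling["maxUnavailable"] = 1  # value used in the final manifest
        # This sketch always emits a rolling update, as in the final manifest.
        spec["updateStrategy"] = {"type": "RollingUpdate", "rollingUpdate": rolling}
    return ds


# Hypothetical, heavily trimmed stand-in for the exported Deployment.
example = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "nginx-ingress-controller", "namespace": "ingress-nginx"},
    "spec": {
        "replicas": 1,
        "progressDeadlineSeconds": 600,
        "strategy": {
            "type": "RollingUpdate",
            "rollingUpdate": {"maxSurge": "25%", "maxUnavailable": "25%"},
        },
        "template": {"spec": {"hostNetwork": True}},
    },
    "status": {"replicas": 1},
}

daemonset = deployment_to_daemonset(example)
print(daemonset["kind"], sorted(daemonset["spec"]))
```

The input dict is left untouched, so the original export can still be kept for comparison, much like the `cp` to a `-modify.yaml` file above.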

# Final configuration

[root@node-1 8-ingress]# cat nginx-ingress-controller-modify.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: nginx-ingress-controller

namespace: ingress-nginx

spec:

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

updateStrategy:

rollingUpdate:

maxUnavailable: 1

type: RollingUpdate

template:

metadata:

annotations:

prometheus.io/port: "10254"

prometheus.io/scrape: "true"

creationTimestamp: null

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

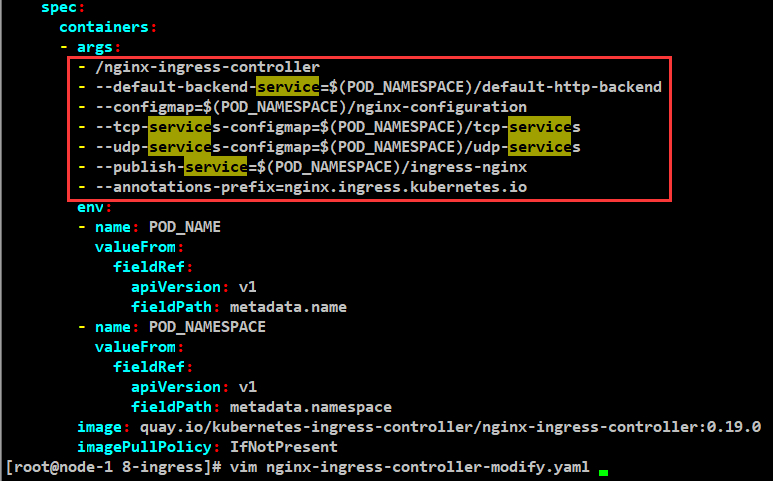

containers:

- args:

- /nginx-ingress-controller

- --default-backend-service=$(POD_NAMESPACE)/default-http-backend

- --configmap=$(POD_NAMESPACE)/nginx-configuration

- --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services

- --udp-services-configmap=$(POD_NAMESPACE)/udp-services

- --publish-service=$(POD_NAMESPACE)/ingress-nginx

- --annotations-prefix=nginx.ingress.kubernetes.io

env:

- name: POD_NAME

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.namespace

image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.19.0

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: nginx-ingress-controller

ports:

- containerPort: 80

hostPort: 80

name: http

protocol: TCP

- containerPort: 443

hostPort: 443

name: https

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources: {}

securityContext:

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

runAsUser: 33

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

hostNetwork: true

nodeSelector:

app: ingress

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

serviceAccount: nginx-ingress-serviceaccount

serviceAccountName: nginx-ingress-serviceaccount

terminationGracePeriodSeconds: 30

# Changes complete.

[root@node-1 8-ingress]# kubectl get deploy -n ingress-nginx

NAME READY UP-TO-DATE AVAILABLE AGE

default-http-backend 1/1 1 1 13d

nginx-ingress-controller 1/1 1 1 13d

[root@node-1 8-ingress]# kubectl get deploy -n ingress-nginx nginx-ingress-controller

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-ingress-controller 1/1 1 1 13d

[root@node-1 8-ingress]# kubectl delete deploy -n ingress-nginx nginx-ingress-controller

deployment.apps "nginx-ingress-controller" deleted

# Apply the DaemonSet

[root@node-1 8-ingress]# kubectl apply -f nginx-ingress-controller-modify.yaml

daemonset.apps/nginx-ingress-controller created

[root@node-1 8-ingress]# kubectl get ds -n ingress-nginx

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

nginx-ingress-controller 1 1 1 1 1 app=ingress 46s

[root@node-1 8-ingress]# kubectl get daemonset -n ingress-nginx

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

nginx-ingress-controller 1 1 1 1 1 app=ingress 57s

# The controller is still on node-2, the same node as before, as expected.

[root@node-1 8-ingress]# kubectl get pods -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default-http-backend-6b849d7877-cnzsx 1/1 Running 13 13d 10.200.139.116 node-3 <none> <none>

nginx-ingress-controller-6tx2w 1/1 Running 0 86s 172.16.1.22 node-2 <none> <none>

# Check that it started correctly

[root@node-2 ~]# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

ce4a82bd59943 0eaf7c96a4f12 About a minute ago Running nginx-ingress-controller 0 1273d64da1260

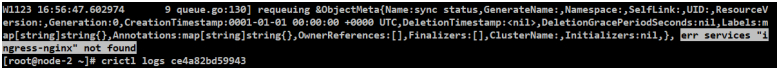

[root@node-2 ~]# crictl logs ce4a82bd59943

# Fix: create the ingress-nginx Service

[root@node-1 8-ingress]# kubectl apply -f ingress-nginx-service-modify.yaml

service/ingress-nginx created

[root@node-1 8-ingress]# cat ingress-nginx-service-modify.yaml

apiVersion: v1

kind: Service

metadata:

name: ingress-nginx

labels:

app: ingress-nginx

namespace: ingress-nginx

spec:

type: NodePort

selector:

app: ingress-nginx

ports:

- name: http

port: 80

targetPort: 80

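# The error above traces back to this file (note its --publish-service=$(POD_NAMESPACE)/ingress-nginx argument):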

[root@node-1 8-ingress]# cat nginx-ingress-controller-modify.yaml

# Test access to another address (this confirms that Ingress works):

[root@node-1 configs]# pwd

/root/mooc-k8s-demo/configs

[root@node-1 configs]# kubectl apply -f springboot-web.yaml

[root@node-1 configs]# kubectl get pods -n dev

NAME READY STATUS RESTARTS AGE

springboot-web-demo-7564d56d5f-qfbbm 1/1 Running 0 2m33s

[root@node-1 configs]# kubectl delete -f springboot-web.yaml

deployment.apps "springboot-web-demo" deleted

[root@node-1 configs]# kubectl get pods -n dev

No resources found in dev namespace.

# Now extend ingress to two nodes. With a DaemonSet (ds), all it takes is one label.

[root@node-1 8-ingress]# kubectl label node node-3 app=ingress

node/node-3 labeled

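# The nginx-ingress-controller on node-3 failed to start because node-3 runs Harbor and harbor-nginx already occupies port 80. Still, this shows that labeling another node is enough to launch a new instance there, which is very convenient.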

[root@node-1 8-ingress]# kubectl get pods -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default-http-backend-6b849d7877-cnzsx 1/1 Running 14 13d 10.200.139.125 node-3 <none> <none>

nginx-ingress-controller-n6j64 1/1 Running 1 11h 172.16.1.22 node-2 <none> <none>

nginx-ingress-controller-rsmqx 0/1 CrashLoopBackOff 4 111s 172.16.1.23 node-3 <none> <none>

# Remove the label: the failing nginx-ingress-controller pod on node-3 is removed automatically

[root@node-1 ~]# kubectl label node node-3 app-

node/node-3 labeled

[root@node-1 ~]# kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

default-http-backend-6b849d7877-cnzsx 1/1 Running 14 13d

nginx-ingress-controller-n6j64 1/1 Running 1 11h
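The label-driven behaviour seen above (add the label and a pod appears, remove it and the pod goes away) comes down to matching node labels against the nodeSelector. A minimal Python sketch of that matching rule follows; `nodes_matching` is a hypothetical helper, not a Kubernetes API, and the node data mirrors the three nodes in these notes.

```python
def nodes_matching(node_labels: dict, node_selector: dict) -> list:
    """Names of nodes whose labels contain every key/value in node_selector."""
    return sorted(
        name
        for name, labels in node_labels.items()
        if all(labels.get(key) == value for key, value in node_selector.items())
    )


selector = {"app": "ingress"}  # nodeSelector from the DaemonSet above
nodes = {"node-1": {}, "node-2": {"app": "ingress"}, "node-3": {}}

print(nodes_matching(nodes, selector))  # only node-2 carries the label

nodes["node-3"]["app"] = "ingress"      # kubectl label node node-3 app=ingress
print(nodes_matching(nodes, selector))

del nodes["node-3"]["app"]              # kubectl label node node-3 app-
print(nodes_matching(nodes, selector))
```

Matching only decides where a pod is scheduled; whether it then starts successfully is a separate matter, as the port 80 conflict on node-3 showed.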

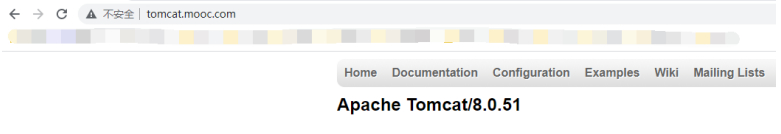

Question 2: Layer-4 proxying: service discovery for TCP services with ingress-nginx

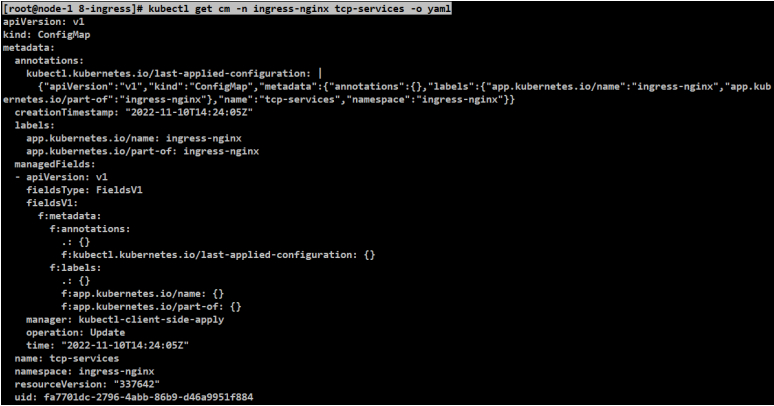

# List the ConfigMaps in the ingress-nginx namespace.

[root@node-1 8-ingress]# kubectl get cm -n ingress-nginx

NAME DATA AGE

ingress-controller-leader-nginx 0 13d

kube-root-ca.crt 1 13d

nginx-configuration 0 13d

# tcp-services was created when ingress-nginx was deployed

tcp-services 0 13d

udp-services 0 13d

[root@node-1 8-ingress]# kubectl get cm -n ingress-nginx tcp-services -o yaml

[root@node-1 8-ingress]# cat tcp-config.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: tcp-services

namespace: ingress-nginx

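# Services to expose through the layer-4 proxy are configured under data

data:

# "30000" is the port to expose

# dev/web-demo:80 is the target: the Service web-demo in namespace dev, port 80. This 80 is the Service port, not the container port.

# The service is exposed through the ingress-nginx controller above, on external port 30000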

"30000": dev/web-demo:80

# Apply it on node-1

[root@node-1 8-ingress]# kubectl apply -f tcp-config.yaml

configmap/tcp-services created
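To make the entry format in tcp-config.yaml concrete, here is a small Python sketch that splits one data entry into its parts. `TcpMapping` and `parse_tcp_entry` are names invented for this illustration, and any colon-separated fields after the port are simply ignored by the sketch.

```python
from typing import NamedTuple


class TcpMapping(NamedTuple):
    listen_port: int   # port opened on the ingress node
    namespace: str
    service: str
    service_port: str  # Service port, not the container port


def parse_tcp_entry(key: str, value: str) -> TcpMapping:
    """Parse one data entry such as "30000": "dev/web-demo:80"."""
    target, _, rest = value.partition(":")
    namespace, sep, service = target.partition("/")
    service_port = rest.split(":")[0]  # later fields are ignored here
    if not sep or not namespace or not service or not service_port:
        raise ValueError(f"expected namespace/service:port, got {value!r}")
    return TcpMapping(int(key), namespace, service, service_port)


mapping = parse_tcp_entry("30000", "dev/web-demo:80")
print(mapping)
```

Read this way, the entry above says: listen on 30000 on the ingress node and forward to port 80 of the Service web-demo in namespace dev.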

# List the ConfigMaps in the ingress-nginx namespace again.

[root@node-1 8-ingress]# kubectl get cm -n ingress-nginx

NAME DATA AGE

ingress-controller-leader-nginx 0 13d

kube-root-ca.crt 1 13d

nginx-configuration 0 13d

tcp-services 1 3m50s

udp-services 0 13d

# Start the web-demo service (required); run this on node-1

[root@node-1 configs]# pwd

/root/mooc-k8s-demo/configs

[root@node-1 configs]# kubectl apply -f web.yaml -n dev

deployment.apps/web-demo created

service/web-demo created

[root@node-1 8-ingress]# kubectl get pods -n dev -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-demo-8689dbf78d-4d2wl 1/1 Running 0 2m15s 172.16.1.22 node-2 <none> <none>

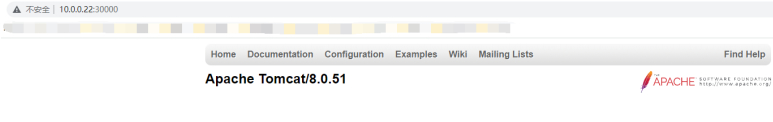

# On node-2, where ingress-nginx runs, check that port 30000 is listening

[root@node-2 ~]# netstat -lntup|grep 30000

tcp 0 0 0.0.0.0:30000 0.0.0.0:* LISTEN 8006/nginx: master

tcp6 0 0 :::30000 :::* LISTEN 8006/nginx: master

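# The service can now be reached a second way

# A web service does not really need a layer-4 proxy, but it is convenient for testing.

# Confirm the Service: the name configured is the Service name web-demo, not the Deployment name.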

[root@node-1 8-ingress]# kubectl get svc -n dev

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

web-demo ClusterIP 10.233.121.96 <none> 80/TCP 91s

[root@node-1 8-ingress]# kubectl get svc -n dev web-demo -o yaml

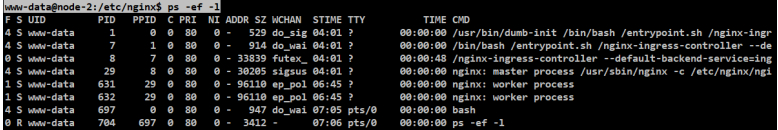

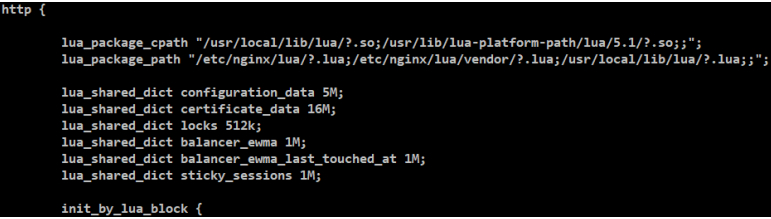

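Question 3: Custom configuration

# Look at the current nginx configuration; it is all ingress-nginx defaults.

# These settings apply to every server block. Keys may differ from the nginx directive names; look them up in the official documentation.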

[root@node-2 ~]# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

b6c1cd6d15c9c 0eaf7c96a4f12 Less than a second ago Running nginx-ingress-controller 1 ab6229b7fe59b

[root@node-2 ~]# crictl exec -it b6c1cd6d15c9c bash

www-data@node-2:/etc/nginx$ ps -ef -l

www-data@node-2:/etc/nginx$ more /etc/nginx/nginx.conf

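Older versions wrote concrete endpoints into this file: each upstream was filled with the endpoints read from the Service, that is, the pod IPs. Every endpoint change then triggered a reload, and during batch deployments the reload frequency and cost could seriously hurt stability. Somewhat newer versions no longer list concrete endpoints here.

Those newer versions introduced a Lua module that updates endpoints dynamically, without reloading nginx.

# Look at the prepared config file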

[root@node-1 8-ingress]# cat nginx-config.yaml

kind: ConfigMap

apiVersion: v1

metadata:

# The values below are fixed: this is the name of the ConfigMap that holds custom parameters

name: nginx-configuration

namespace: ingress-nginx

labels:

app: ingress-nginx

# Edit data to set custom parameter values

# See the official documentation for the supported keys and values

data:

proxy-body-size: "64m"

proxy-read-timeout: "180"

proxy-send-timeout: "180"

https://github.com/kubernetes/ingress-nginx

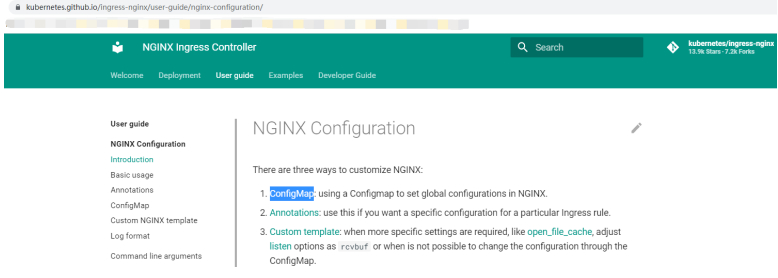

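https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/

# This site (the one shown in green) documents ingress-nginx in detail

# A ConfigMap can provide the global configuration for ingress-nginx

# Apply the nginx configuration change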

[root@node-1 8-ingress]# kubectl apply -f nginx-config.yaml

configmap/nginx-configuration configured

# Check the nginx configuration inside the container again

[root@node-2 ~]# crictl exec -it b6c1cd6d15c9c bash

www-data@node-2:/etc/nginx$ more /etc/nginx/nginx.conf

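# Verify the newly added settings

# They apply to every server block. Keys may differ from the nginx directive names; look them up in the official documentation.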

client_max_body_size "64m";

proxy_send_timeout 180s;

proxy_read_timeout 180s;
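The point that ConfigMap keys differ from nginx directive names can be shown with a toy renderer in Python. It covers only the three keys observed in these notes; the table `OBSERVED` and the function `render` are illustrative and are not how the controller itself is written.

```python
# Key -> (nginx directive, value formatter), as observed in the output above.
# The real controller supports many more keys; see its documentation.
OBSERVED = {
    "proxy-body-size": ("client_max_body_size", lambda v: f'"{v}"'),
    "proxy-read-timeout": ("proxy_read_timeout", lambda v: f"{v}s"),
    "proxy-send-timeout": ("proxy_send_timeout", lambda v: f"{v}s"),
}


def render(data: dict) -> list:
    """Render ConfigMap data entries as nginx directive lines."""
    lines = []
    for key, value in data.items():
        if key not in OBSERVED:
            raise KeyError(f"key not covered by this sketch: {key}")
        directive, fmt = OBSERVED[key]
        lines.append(f"{directive} {fmt(value)};")
    return lines


data = {
    "proxy-body-size": "64m",
    "proxy-read-timeout": "180",
    "proxy-send-timeout": "180",
}
for line in render(data):
    print(line)
```

Note that proxy-body-size ends up as client_max_body_size, which is why the key names have to be looked up rather than guessed from nginx directive names.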

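Question 4: Custom global headers

# You may also want custom headers, for example added in the global configuration.

# They apply to every server block. Keys may differ from the nginx directive names; look them up in the official documentation.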

[root@node-1 8-ingress]# cat custom-header-global.yaml

# This file is pulled in as the source of the headers

apiVersion: v1

kind: ConfigMap

# Keys and values are set under data

data:

# Add proxy-set-headers to nginx-configuration

proxy-set-headers: "ingress-nginx/custom-headers"

metadata:

# proxy-set-headers points at the custom-headers ConfigMap in the ingress-nginx namespace; the name custom-headers matches metadata.name of the second ConfigMap below.

name: nginx-configuration

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: v1

kind: ConfigMap

data:

X-Different-Name: "true"

X-Request-Start: t=${msec}

X-Using-Nginx-Controller: "true"

metadata:

name: custom-headers

namespace: ingress-nginx

# Apply the nginx configuration change

[root@node-1 8-ingress]# kubectl apply -f custom-header-global.yaml

configmap/nginx-configuration configured

configmap/custom-headers created

# Check the nginx configuration inside the container again

[root@node-2 ~]# crictl exec -it f6beb3ec4b469 bash

www-data@node-2:/etc/nginx$ more /etc/nginx/nginx.conf

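# Verify the newly added settings

# They apply to every server block. Keys may differ from the nginx directive names; look them up in the official documentation.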

# Custom headers to proxied server

proxy_set_header X-Different-Name "true";

proxy_set_header X-Request-Start "t=${msec}";

proxy_set_header X-Using-Nginx-Controller "true";

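Question 5: Custom headers for a single Ingress

# Applies to one server block only. Keys may differ from the nginx directive names; look them up in the official documentation.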

[root@node-1 8-ingress]# cat custom-header-spec-ingress.yaml

apiVersion: extensions/v1beta1

# kind is Ingress

kind: Ingress

metadata:

annotations:

# The only difference is the configuration-snippet annotation

nginx.ingress.kubernetes.io/configuration-snippet: |

more_set_headers "Request-Id: $req_id";

name: web-demo

namespace: dev

spec:

rules:

- host: web-dev.mooc.com

http:

paths:

- backend:

serviceName: web-demo

servicePort: 80

path: /

# Apply the nginx configuration change

[root@node-1 8-ingress]# kubectl apply -f custom-header-spec-ingress.yaml

# Check the nginx configuration inside the container again

[root@node-2 ~]# crictl exec -it f6beb3ec4b469 bash

www-data@node-2:/etc/nginx$ more /etc/nginx/nginx.conf

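# Verify the newly added settings

# Applies only to the "server web-dev.mooc.com" block. Keys may differ from the nginx directive names; look them up in the official documentation.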

more_set_headers "Request-Id: $req_id";

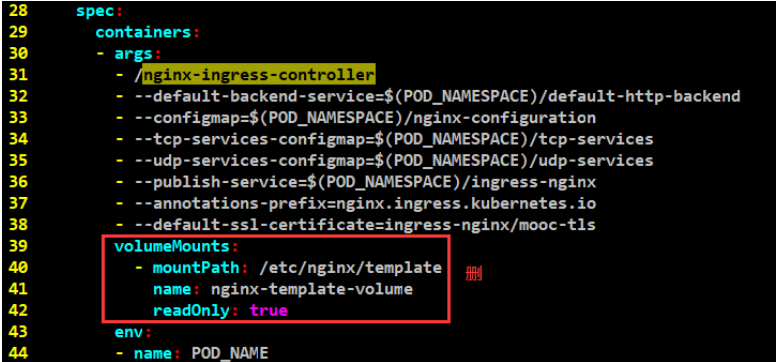

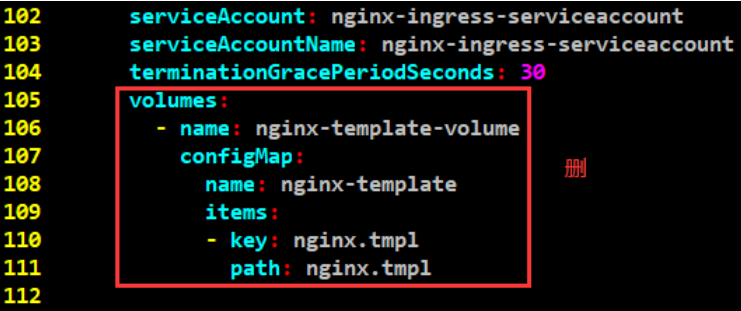

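Question 6: Configuration templates (compare with 10-5/6)

If the options above do not meet your needs, you can use a custom template.

https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/custom-template/

The nginx configuration is generated by the controller from the template file /etc/nginx/template/nginx.tmpl.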

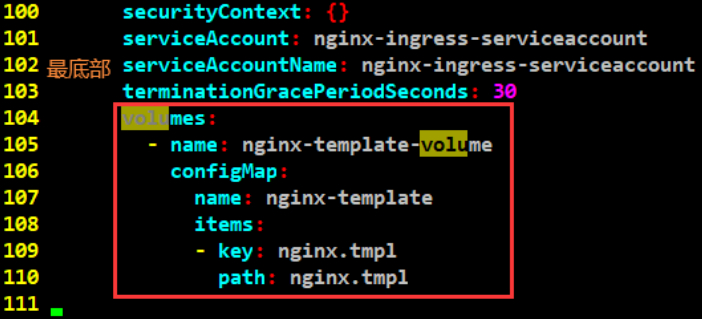

The approach is shown below; it relies on a mounted directory.

# Copy the following

volumeMounts:

- mountPath: /etc/nginx/template

name: nginx-template-volume

readOnly: true

volumes:

- name: nginx-template-volume

configMap:

name: nginx-template

items:

- key: nginx.tmpl

path: nginx.tmpl

[root@node-1 8-ingress]# cp nginx-ingress-controller-modify.yaml nginx-ingress-controller-modify2.yaml

[root@node-1 8-ingress]# vim nginx-ingress-controller-modify2.yaml

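# Add the two blocks boxed in red

The first block adds a mount at the container level.

The second block supplies the file content from a ConfigMap.

# File content after the change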

[root@node-1 8-ingress]# cat nginx-ingress-controller-modify2.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: nginx-ingress-controller

namespace: ingress-nginx

spec:

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

updateStrategy:

rollingUpdate:

maxUnavailable: 1

type: RollingUpdate

template:

metadata:

annotations:

prometheus.io/port: "10254"

prometheus.io/scrape: "true"

creationTimestamp: null

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

containers:

- args:

- /nginx-ingress-controller

- --default-backend-service=$(POD_NAMESPACE)/default-http-backend

- --configmap=$(POD_NAMESPACE)/nginx-configuration

- --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services

- --udp-services-configmap=$(POD_NAMESPACE)/udp-services

- --publish-service=$(POD_NAMESPACE)/ingress-nginx

- --annotations-prefix=nginx.ingress.kubernetes.io

- --default-ssl-certificate=ingress-nginx/mooc-tls

volumeMounts:

- mountPath: /etc/nginx/template

name: nginx-template-volume

readOnly: true

env:

- name: POD_NAME

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.namespace

image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.19.0

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: nginx-ingress-controller

ports:

- containerPort: 80

hostPort: 80

name: http

protocol: TCP

- containerPort: 443

hostPort: 443

name: https

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources: {}

securityContext:

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

runAsUser: 33

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

hostNetwork: true

nodeSelector:

app: ingress

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

serviceAccount: nginx-ingress-serviceaccount

serviceAccountName: nginx-ingress-serviceaccount

terminationGracePeriodSeconds: 30

volumes:

- name: nginx-template-volume

configMap:

name: nginx-template

items:

- key: nginx.tmpl

path: nginx.tmpl

# The nginx.tmpl file is needed before nginx-ingress-controller-modify2.yaml can be applied.

[root@node-1 ~]# kubectl cp --help

# Put nginx.tmpl in the same directory as nginx-ingress-controller-modify2.yaml.

[root@node-1 8-ingress]# kubectl cp ingress-nginx/nginx-ingress-controller-n6j64:/etc/nginx/template/nginx.tmpl /root/deep-in-kubernetes/8-ingress/nginx.tmpl

tar: Removing leading `/' from member names

(

With Docker, a file inside a container is copied with: docker cp CONTAINER_ID:/container-dir/file.

This cluster runs containerd, where the usage differs from Docker.

)

[root@node-1 8-ingress]# cat nginx.tmpl

# Before applying nginx-ingress-controller-modify2.yaml, create the ConfigMap, which in turn requires the /etc/nginx/template/nginx.tmpl file. The file is taken from the nginx-controller container created by the DaemonSet earlier.

[root@node-1 8-ingress]# kubectl create cm nginx-template --from-file /root/deep-in-kubernetes/8-ingress/nginx.tmpl -n ingress-nginx

configmap/nginx-template created

[root@node-1 8-ingress]# kubectl get cm nginx-template -n ingress-nginx

NAME DATA AGE

nginx-template 1 54s

[root@node-1 8-ingress]# kubectl get cm nginx-template -n ingress-nginx -o yaml

# Applying the change restarts nginx-ingress

[root@node-1 8-ingress]# kubectl apply -f nginx-ingress-controller-modify2.yaml

daemonset.apps/nginx-ingress-controller configured

[root@node-1 8-ingress]# kubectl get pods -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default-http-backend-6b849d7877-cnzsx 1/1 Running 14 13d 10.200.139.125 node-3 <none> <none>

nginx-ingress-controller-8q5rz 1/1 Running 0 17s 172.16.1.22 node-2 <none> <none>

[root@node-2 ~]# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

eae4a469464b2 0eaf7c96a4f12 32 seconds ago Running nginx-ingress-controller 0 6bd302e881bf6

[root@node-2 ~]# crictl logs eae4a469464b2

# Try editing the template to see whether it can be used to customize the configuration

[root@node-1 8-ingress]# kubectl edit cm nginx-template -n ingress-nginx

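# Content inside double braces {{ }} is executed by the program

# The template contains variables and control syntax. The variables are already defined and few of them need changing, so it can be used almost as-is.

As a test, change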

types_hash_max_size 2048;

to

types_hash_max_size 4096;

# Enter the container and check the template file

[root@node-2 ~]# crictl exec -it eae4a469464b2 bash

www-data@node-2:/etc/nginx$ more /etc/nginx/template/nginx.tmpl

# The modified content is visible

types_hash_max_size 4096;

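Usage is the same as in "10-5/6: modifying a ConfigMap and watching for changes".

The mechanism is the same as for any ConfigMap volume: kubelet periodically checks the configuration a pod depends on and updates the content of the mounted volume dynamically.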

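Question 7: TLS and HTTPS certificate configuration

HTTPS needs a certificate, but the domains used here are all fake.

With a fake domain there is no way to obtain a CA-issued certificate, so generate a self-signed one and configure it in ingress-nginx.

# Skip this step if you already have a certificate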

[root@node-1 tls]# cat gen-secret.sh

#!/bin/bash

# Use openssl to generate a certificate for mooc.com. It outputs the key mooc.key and the certificate mooc.crt.

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout mooc.key -out mooc.crt -subj "/CN=*.mooc.com/O=*.mooc.com"

# With the key and certificate, create a Secret of type tls named mooc-tls from the key file mooc.key and the certificate file mooc.crt, in the ingress-nginx namespace.

kubectl create secret tls mooc-tls --key mooc.key --cert mooc.crt -n ingress-nginx

# Create the certificate and the Secret, both in the ingress-nginx namespace.

[root@node-1 tls]# /bin/sh gen-secret.sh

Generating a 2048 bit RSA private key

.+++

..............................+++

writing new private key to 'mooc.key'

-----

secret/mooc-tls created

[root@node-1 tls]# kubectl get secret mooc-tls -n ingress-nginx

NAME TYPE DATA AGE

mooc-tls kubernetes.io/tls 2 3m15s

[root@node-1 tls]# kubectl get secret mooc-tls -o yaml -n ingress-nginx

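# Kubernetes added a dedicated tls Secret type because the use case is so common; the type standardizes the format.

# The key names are fixed as tls.crt and tls.key; underneath it is still an ordinary Secret.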

apiVersion: v1

data:

tls.crt: XX

tls.key: YY

kind: Secret

metadata:

creationTimestamp: "2022-11-24T09:32:02Z"

managedFields:

- apiVersion: v1

fieldsType: FieldsV1

fieldsV1:

f:data:

.: {}

f:tls.crt: {}

f:tls.key: {}

f:type: {}

manager: kubectl-create

operation: Update

time: "2022-11-24T09:32:02Z"

name: mooc-tls

namespace: ingress-nginx

resourceVersion: "1059015"

uid: 2bd98XX6e9d1f7b4c

type: kubernetes.io/tls
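The shape of the Secret dumped above can be reproduced with a few lines of Python, which makes the fixed key names and the base64 encoding explicit. This is a sketch only: `tls_secret` is a hypothetical helper, and the byte strings are placeholders rather than a real certificate or key.

```python
import base64


def tls_secret(name: str, namespace: str, cert_pem: bytes, key_pem: bytes) -> dict:
    """Build a kubernetes.io/tls Secret manifest as a plain dict."""

    def encode(raw: bytes) -> str:
        # Values under `data` are base64-encoded strings.
        return base64.b64encode(raw).decode("ascii")

    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "kubernetes.io/tls",
        "metadata": {"name": name, "namespace": namespace},
        "data": {"tls.crt": encode(cert_pem), "tls.key": encode(key_pem)},
    }


# Placeholder bytes; in practice these are the contents of mooc.crt and mooc.key.
secret = tls_secret(
    "mooc-tls", "ingress-nginx", b"CERT-PEM-PLACEHOLDER", b"KEY-PEM-PLACEHOLDER"
)
print(secret["type"], sorted(secret["data"]))
```

The server-generated metadata in the dump above (creationTimestamp, managedFields, resourceVersion, uid) is deliberately left out, since it is not something you write yourself.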

# With the certificate created, how does Kubernetes put the Secret to use?

[root@node-2 ~]# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

eae4a469464b2 0eaf7c96a4f12 51 minutes ago Running nginx-ingress-controller 0 6bd302e881bf6

[root@node-2 ~]# crictl exec -it eae4a469464b2 bash

www-data@node-2:/etc/nginx$ /nginx-ingress-controller --help

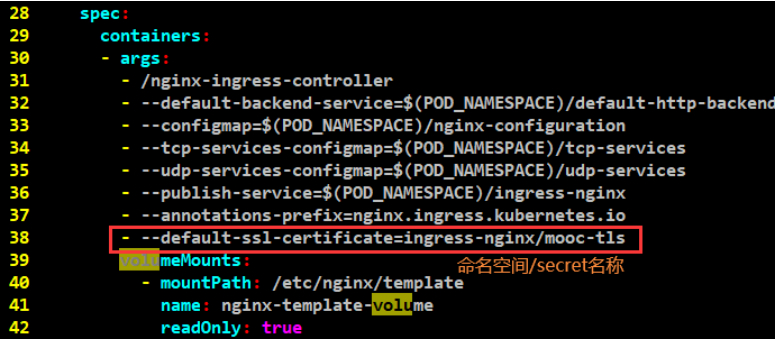

# Use the following flag

--default-ssl-certificate string Secret containing a SSL certificate to be used by the default HTTPS server (catch-all).

Takes the form "namespace/name".

# Configure the certificate

[root@node-1 8-ingress]# vim nginx-ingress-controller-modify2.yaml

- --default-ssl-certificate=ingress-nginx/mooc-tls

# The certificate is referenced as namespace/name

[root@node-1 8-ingress]# kubectl apply -f nginx-ingress-controller-modify2.yaml

daemonset.apps/nginx-ingress-controller configured

# Check the springboot service that will be used for the access test

[root@node-1 tls]# kubectl get pods -n dev

NAME READY STATUS RESTARTS AGE

springboot-web-demo-7564d56d5f-vkh79 1/1 Running 0 5m31s

# After giving ingress-nginx a default certificate, each host must still declare which certificate it uses.

[root@node-1 tls]# cat springboot-ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: springboot-web-demo

namespace: dev

spec:

rules:

- host: springboot.mooc.com

http:

paths:

- backend:

serviceName: springboot-web-demo

servicePort: 80

path: /

# TLS is configured here

# With both the host and the certificate in place, an HTTPS endpoint such as "https://springboot.mooc.com" is created.

tls:

- hosts:

- springboot.mooc.com

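# mooc-tls is the name given when the Secret was created. Note that secretName is resolved in the Ingress's own namespace (dev), while the Secret above was created in ingress-nginx.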

secretName: mooc-tls

[root@node-1 tls]# kubectl apply -f springboot-ingress.yaml

[root@node-1 tls]# kubectl get pods -n dev

NAME READY STATUS RESTARTS AGE

springboot-web-demo-7564d56d5f-vkh79 1/1 Running 0 10m

[root@node-1 8-ingress]# kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

default-http-backend-6b849d7877-cnzsx 1/1 Running 14 13d

nginx-ingress-controller-q2jcq 1/1 Running 0 31s

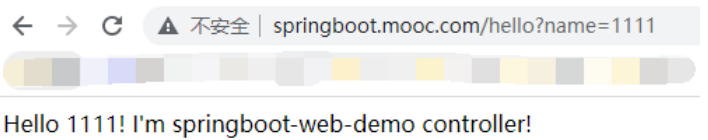

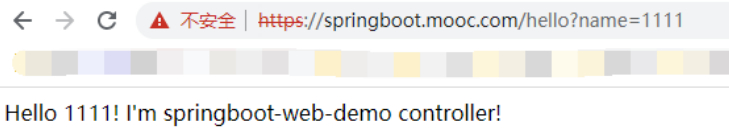

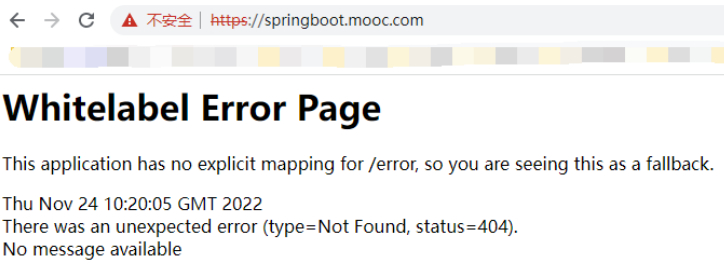

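Access works; the certificate is now configured.

With a CA-issued certificate, the browser would show a green indicator to the left of the address.

https://springboot.mooc.com/hello?name=1111

HTTPS test: the certificate is self-signed, so the browser reports it as not secure.

# Requesting the bare domain now returns 404 and redirects automatically to https://springboot.mooc.com/.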

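Question 8: Access control: session persistence

# Another issue: a web service may need session persistence, so that requests in the same session keep reaching the same backend.

# First check the environment and whether the earlier pods can be reused for testing; a test environment is set up here.

# On Windows 10, add the line below to C:\Windows\System32\drivers\etc\hosts, then run ipconfig /flushdns.

10.0.0.22 springboottest.mooc.com

# Add it on the three nodes as well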

[root@node-1/2/3 nginx]# cat /etc/hosts

10.0.0.22 springboottest.mooc.com

[root@node-1 configs]# pwd

/root/mooc-k8s-demo/configs

[root@node-1 configs]# cat springboot-web-test1.yaml

#deploy

apiVersion: apps/v1

kind: Deployment

metadata:

name: springboot-web-test1

spec:

selector:

matchLabels:

app: springboot-web-test

replicas: 1

template:

metadata:

labels:

app: springboot-web-test

spec:

containers:

- name: springboot-web-test

image: hub.mooc.com/kubernetes/springboot-web:v1

ports:

- containerPort: 8080

---

#service

apiVersion: v1

kind: Service

metadata:

name: springboot-web-test

spec:

ports:

- port: 80

protocol: TCP

targetPort: 8080

selector:

app: springboot-web-test

type: ClusterIP

---

#ingress

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: springboot-web-test

spec:

rules:

- host: springboottest.mooc.com

http:

paths:

- path: /

backend:

serviceName: springboot-web-test

servicePort: 80

[root@node-1 configs]# cat springboot-web-test2.yaml

#deploy

apiVersion: apps/v1

kind: Deployment

metadata:

name: springboot-web-test2

spec:

selector:

matchLabels:

app: springboot-web-test

replicas: 1

template:

metadata:

labels:

app: springboot-web-test

spec:

containers:

- name: springboot-web-test

image: hub.mooc.com/kubernetes/springboot-web2:v1

ports:

- containerPort: 8080

---

#service

apiVersion: v1

kind: Service

metadata:

name: springboot-web-test

spec:

ports:

- port: 80

protocol: TCP

targetPort: 8080

selector:

app: springboot-web-test

type: ClusterIP

---

#ingress

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: springboot-web-test

spec:

rules:

- host: springboottest.mooc.com

http:

paths:

- path: /

backend:

serviceName: springboot-web-test

servicePort: 80

[root@node-1 configs]# kubectl apply -f springboot-web-test1.yaml

[root@node-1 configs]# kubectl apply -f springboot-web-test2.yaml

[root@node-1 configs]# kubectl get pods -n dev -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

springboot-web-test1-57c8b88f56-s6mht 1/1 Running 0 8m56s 10.200.139.117 node-3 <none> <none>

springboot-web-test2-9d44f7d9b-75kx4 1/1 Running 0 5m2s 10.200.247.27 node-2 <none> <none>

[root@node-1 tls]# cat springboottest-ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: springboot-web-test

namespace: dev

spec:

rules:

- host: springboottest.mooc.com

http:

paths:

- backend:

serviceName: springboot-web-test

servicePort: 80

path: /

tls:

- hosts:

- springboottest.mooc.com

secretName: mooc-tls

[root@node-1 tls]# kubectl apply -f springboottest-ingress.yaml

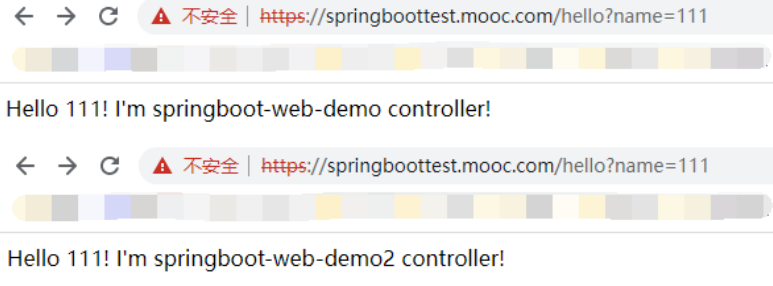

# The two responses appear at random

# So how do you make the session stick?

[root@node-1 8-ingress]# cat ingress-session-modify.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

# Note the annotations: they implement session persistence.

annotations:

# affinity mode: cookie

nginx.ingress.kubernetes.io/affinity: cookie

# cookie hash algorithm: sha1

nginx.ingress.kubernetes.io/session-cookie-hash: sha1

# cookie name: route

nginx.ingress.kubernetes.io/session-cookie-name: route

name: springboot-web-test

namespace: dev

spec:

rules:

- host: springboottest.mooc.com

http:

paths:

- backend:

serviceName: springboot-web-test

servicePort: 80

path: /

[root@node-1 8-ingress]# kubectl apply -f ingress-session-modify.yaml

# After this change, HTTPS for this host no longer works, because this Ingress has no tls section.

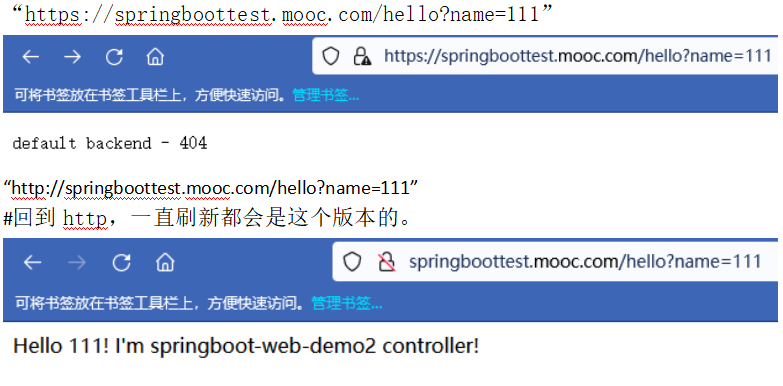

# Firefox is used here; Chrome redirects to the HTTPS page automatically (so it keeps showing "backend").

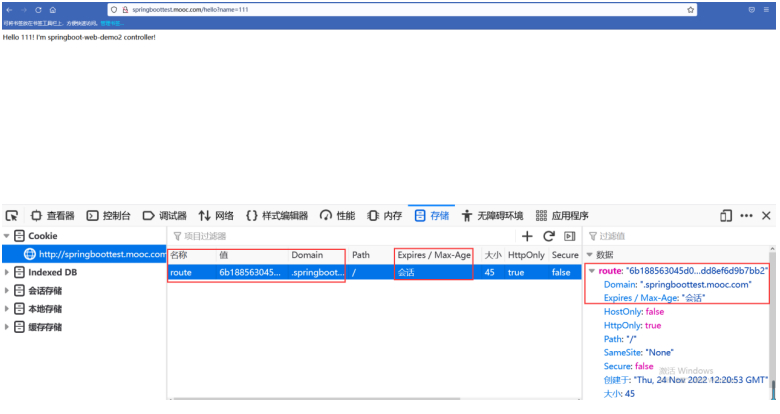

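# Check the cookie

# The cookie is named route, it expires with the session, and its value is a sha1 hash.

# Sending this cookie keeps every request on the same backend.

# Only after closing and reopening the browser might requests reach the other backend.

That solves session persistence.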
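The idea behind cookie affinity can be sketched in Python: the first request gets a backend and a cookie derived from it, and later requests carrying that cookie go back to the same backend. This illustrates the concept only and is not the controller's actual implementation; the class `StickyBalancer` is hypothetical, and the backend addresses are the two pod IPs listed earlier with the container port 8080.

```python
import hashlib
import itertools


class StickyBalancer:
    """Round-robin for new sessions, cookie lookup for existing ones."""

    def __init__(self, backends):
        # Cookie value = sha1 of the backend address (illustrative choice).
        self._by_cookie = {
            hashlib.sha1(b.encode()).hexdigest(): b for b in backends
        }
        self._round_robin = itertools.cycle(backends)

    def route(self, cookie=None):
        """Return (backend, cookie_value) for one request."""
        backend = self._by_cookie.get(cookie)
        if backend is None:  # no cookie yet, or it matches no backend
            backend = next(self._round_robin)
            cookie = hashlib.sha1(backend.encode()).hexdigest()
        return backend, cookie


lb = StickyBalancer(["10.200.139.117:8080", "10.200.247.27:8080"])
first, route_cookie = lb.route()  # first visit: no cookie sent
repeat = [lb.route(route_cookie)[0] for _ in range(5)]
print(first, repeat)
```

A request without the cookie, such as one from a freshly restarted browser, goes through the round-robin path again, which matches the behaviour described above.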

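Question 9: Access control: shifting a small share of traffic (canary deployment) (compare with 10-4)

During a rollout you may want to send only a small share of traffic to the new version.

For example, to check whether a newly deployed service is healthy, first switch 10% of traffic to it and watch for problems.

Then raise the share to 50%, and finally to 100%.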
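The 10% / 50% / 100% progression can be simulated in Python to see what a weight means per request. This is a conceptual sketch with made-up names (`pick_backend`, `simulate`, and the labels "stable" and "canary"); it says nothing about how the controller itself splits traffic.

```python
import random


def pick_backend(weight_percent: int, rng: random.Random) -> str:
    """Send roughly weight_percent% of requests to the new version."""
    if not 0 <= weight_percent <= 100:
        raise ValueError("weight_percent must be between 0 and 100")
    return "canary" if rng.randrange(100) < weight_percent else "stable"


def simulate(weight_percent: int, requests: int = 10_000, seed: int = 0) -> float:
    """Fraction of simulated requests that reached the new version."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    hits = sum(pick_backend(weight_percent, rng) == "canary" for _ in range(requests))
    return hits / requests


for weight in (10, 50, 100):
    print(weight, simulate(weight))
```

Each request is decided independently, so the observed share only approximates the weight, and a single user may see both versions unless session persistence is also in place.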

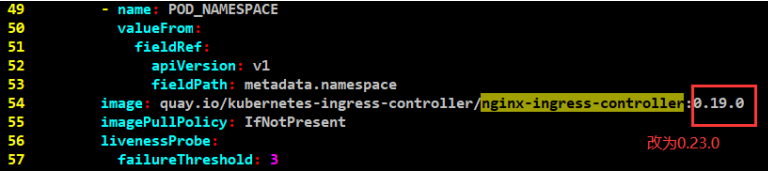

Basic setup (upgrade the nginx-ingress-controller version)

# At the time of writing, only newer versions support traffic splitting, so upgrade the nginx-ingress-controller image to 0.23.0.

[root@node-1 8-ingress]# cp nginx-ingress-controller-modify2.yaml nginx-ingress-controller-modify3.yaml

[root@node-1 8-ingress]# kubectl delete -f nginx-ingress-controller-modify2.yaml

daemonset.apps "nginx-ingress-controller" deleted

# File content after the change

[root@node-1 8-ingress]# cat nginx-ingress-controller-modify3.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: nginx-ingress-controller

namespace: ingress-nginx

spec:

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

updateStrategy:

rollingUpdate:

maxUnavailable: 1

type: RollingUpdate

template:

metadata:

annotations:

prometheus.io/port: "10254"

prometheus.io/scrape: "true"

creationTimestamp: null

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

containers:

- args:

- /nginx-ingress-controller

- --default-backend-service=$(POD_NAMESPACE)/default-http-backend

- --configmap=$(POD_NAMESPACE)/nginx-configuration

- --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services

- --udp-services-configmap=$(POD_NAMESPACE)/udp-services

- --publish-service=$(POD_NAMESPACE)/ingress-nginx

- --annotations-prefix=nginx.ingress.kubernetes.io

- --default-ssl-certificate=ingress-nginx/mooc-tls

env:

- name: POD_NAME

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.namespace

image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.23.0

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: nginx-ingress-controller

ports:

- containerPort: 80

hostPort: 80

name: http

protocol: TCP

- containerPort: 443

hostPort: 443

name: https

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources: {}

securityContext:

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

runAsUser: 33

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

hostNetwork: true

nodeSelector:

app: ingress

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

serviceAccount: nginx-ingress-serviceaccount

serviceAccountName: nginx-ingress-serviceaccount

terminationGracePeriodSeconds: 30

[root@node-1 8-ingress]# kubectl apply -f nginx-ingress-controller-modify3.yaml

daemonset.apps/nginx-ingress-controller created

[root@node-1 8-ingress]# kubectl get pods -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default-http-backend-6b849d7877-cnzsx 1/1 Running 14 13d 10.200.139.125 node-3 <none> <none>

nginx-ingress-controller-cgffj 1/1 Running 0 3m47s 172.16.1.22 node-2 <none> <none>

[root@node-2 ~]# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

d6a1a9fc0269b 98d43aa19f5de 2 minutes ago Running nginx-ingress-controller 0 48a494340b0f6

[root@node-1 ingress]# pwd

/root/deep-in-kubernetes/8-ingress/canary

[root@node-1 8-ingress]# mkdir canary

[root@node-1 8-ingress]# cd canary

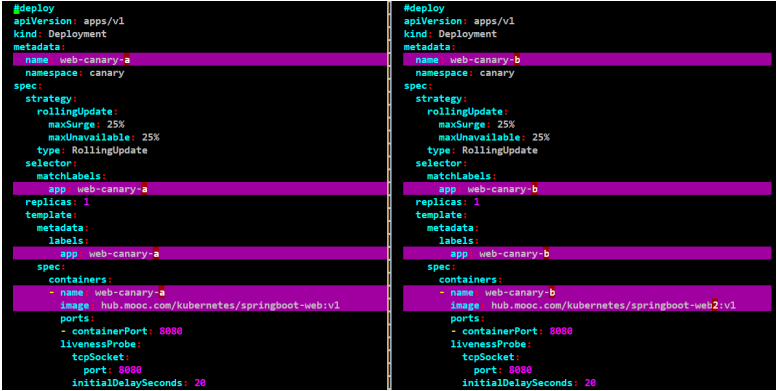

[root@node-1 canary]# cat web-canary-a-modify.yaml

#deploy

apiVersion: apps/v1

kind: Deployment

metadata:

name: web-canary-a

namespace: canary

spec:

strategy:

rollingUpdate:

maxSurge: 25%

maxUnavailable: 25%

type: RollingUpdate

selector:

matchLabels:

app: web-canary-a

replicas: 1

template:

metadata:

labels:

app: web-canary-a

spec:

containers:

- name: web-canary-a

image: hub.mooc.com/kubernetes/springboot-web:v1

ports:

- containerPort: 8080

livenessProbe:

tcpSocket:

port: 8080

initialDelaySeconds: 20

periodSeconds: 10

failureThreshold: 3

successThreshold: 1

timeoutSeconds: 5

readinessProbe:

httpGet:

path: /hello?name=test

port: 8080

scheme: HTTP

initialDelaySeconds: 20

periodSeconds: 10

failureThreshold: 1

successThreshold: 1

timeoutSeconds: 5

---

#service

apiVersion: v1

kind: Service

metadata:

name: web-canary-a

namespace: canary

spec:

ports:

- port: 80

protocol: TCP

targetPort: 8080

selector:

app: web-canary-a

type: ClusterIP

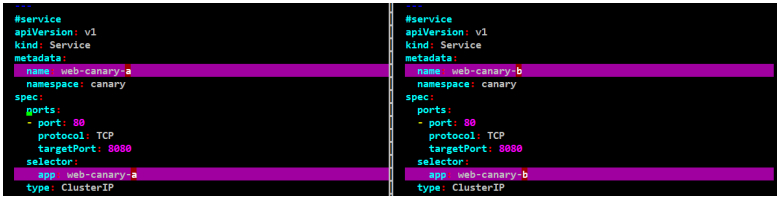

[root@node-1 canary]# cat web-canary-b-modify.yaml

#deploy
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-canary-b
  namespace: canary
spec:
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  selector:
    matchLabels:
      app: web-canary-b
  replicas: 1
  template:
    metadata:
      labels:
        app: web-canary-b
    spec:
      containers:
      - name: web-canary-b
        image: hub.mooc.com/kubernetes/springboot-web2:v1
        ports:
        - containerPort: 8080
        livenessProbe:
          tcpSocket:
            port: 8080
          initialDelaySeconds: 20
          periodSeconds: 10
          failureThreshold: 3
          successThreshold: 1
          timeoutSeconds: 5
        readinessProbe:
          httpGet:
            path: /hello?name=test
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 20
          periodSeconds: 10
          failureThreshold: 1
          successThreshold: 1
          timeoutSeconds: 5
---
#service
apiVersion: v1
kind: Service
metadata:
  name: web-canary-b
  namespace: canary
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: web-canary-b
  type: ClusterIP

#Compare the web-canary-a and web-canary-b manifests

#The two differ only in their names and image; everything else is identical

[root@node-1 canary]# vimdiff web-canary-a-modify.yaml web-canary-b-modify.yaml
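
The same comparison can be scripted when vimdiff is not at hand. The following is a minimal sketch using Python's standard difflib module; the two short strings are abbreviated stand-ins for the manifest files (an assumption for illustration, not the full manifests), and changed_lines is a hypothetical helper name.

```python
import difflib

# Abbreviated stand-ins for web-canary-a-modify.yaml and web-canary-b-modify.yaml.
MANIFEST_A = "name: web-canary-a\nimage: hub.mooc.com/kubernetes/springboot-web:v1\nreplicas: 1"
MANIFEST_B = "name: web-canary-b\nimage: hub.mooc.com/kubernetes/springboot-web2:v1\nreplicas: 1"


def changed_lines(a_text: str, b_text: str) -> list:
    """Return only the lines that differ, prefixed with '- ' (left) or '+ ' (right)."""
    diff = difflib.ndiff(a_text.splitlines(), b_text.splitlines())
    # ndiff also emits '  ' (unchanged) and '? ' (hint) lines; keep only real changes.
    return [line for line in diff if line.startswith(("- ", "+ "))]


for line in changed_lines(MANIFEST_A, MANIFEST_B):
    print(line)
```

To compare the real files, read them with pathlib.Path(...).read_text() and pass the contents to changed_lines.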

#Create the namespace

[root@node-1 canary]# kubectl create ns canary

namespace/canary created

#Create the two services, a and b

[root@node-1 canary]# kubectl apply -f web-canary-a-modify.yaml

deployment.apps/web-canary-a created

service/web-canary-a created

[root@node-1 canary]# kubectl apply -f web-canary-b-modify.yaml

deployment.apps/web-canary-b created

service/web-canary-b created

#The Deployments and Services now exist; to reach them from outside the cluster, an Ingress is still needed.

[root@node-1 canary]# cat ingress-common.yaml

#ingress
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web-canary-a
  namespace: canary
spec:
  rules:
  - host: canary.mooc.com
    http:
      paths:
      - path: /
        backend:
          serviceName: web-canary-a
          servicePort: 80

#On Windows 10, add the following entry to C:\Windows\System32\drivers\etc\hosts, then run ipconfig /flushdns.

10.0.0.22 canary.mooc.com

#Add the same entry on all three nodes

[root@node-1/2/3 nginx]# cat /etc/hosts

10.0.0.22 canary.mooc.com

[root@node-1 canary]# kubectl apply -f ingress-common.yaml

ingress.extensions/web-canary-a created

[root@node-1 canary]# kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

default-http-backend-6b849d7877-cnzsx 1/1 Running 14 13d

nginx-ingress-controller-cgffj 1/1 Running 0 61m

[root@node-3 harbor]# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

4f22b992d0522 3cfd99b74d370 7 minutes ago Running web-canary-b 0 afbc6387e1012

67da7df334a8c e5e494a7ee468 7 minutes ago Running web-canary-a 0 426ca918457d9

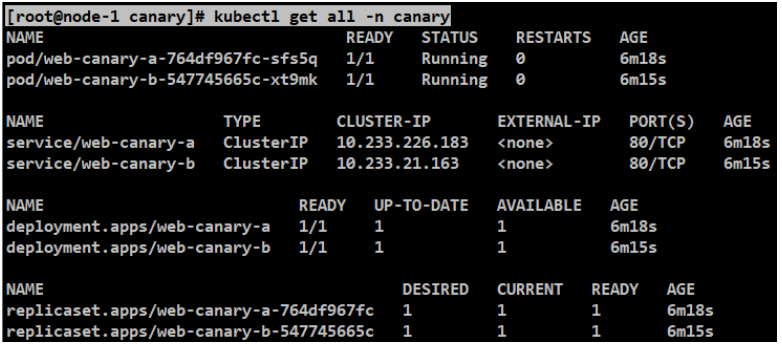

[root@node-1 canary]# kubectl get all -n canary

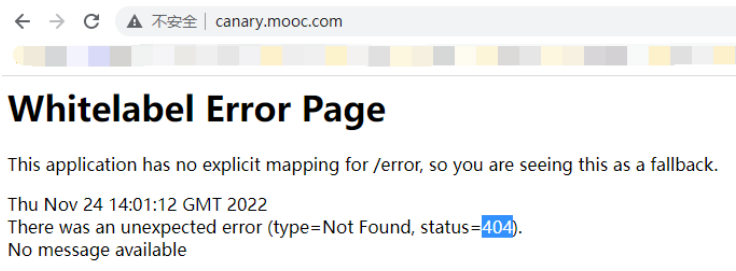

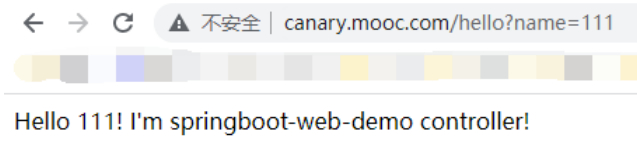

#Requesting "http://canary.mooc.com/" returns 404, which is expected

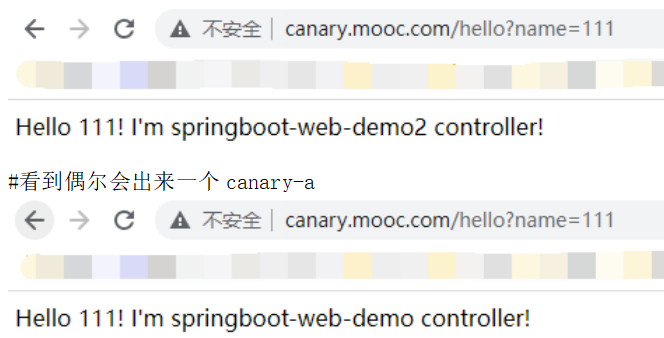

#Request "http://canary.mooc.com/hello?name=111"

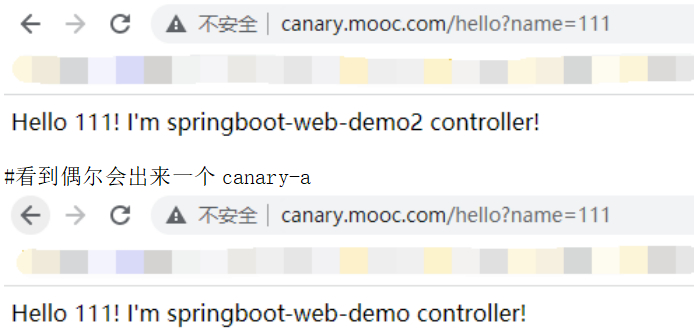

Percentage-based canary traffic splitting: canary-weight

======================== Now roll out canary-b

#Here canary-b can be treated as an upgraded version of canary-a

[root@node-1 canary]# cat ingress-weight.yaml

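#Route a fixed percentage of traffic to the canary.
#This does not overwrite the Ingress from ingress-common.yaml: the name differs (web-canary-b), so it is created alongside the main Ingress, which must remain in place for the canary to work.
#ingress
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  #Name differs from web-canary-a
  name: web-canary-b
  namespace: canary
  #Key part
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    #Percentage of traffic forwarded to web-canary-b. The value here sends 90% to the new service; for a real rollout, start with a small value such as 10.
    nginx.ingress.kubernetes.io/canary-weight: "90"
spec:
  rules:
  #Same host as web-canary-a; think of it as the same service as web-canary-a.
  - host: canary.mooc.com
    http:
      paths:
      - path: /
        backend:
          #Service name differs from web-canary-a
          serviceName: web-canary-b
          servicePort: 80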

[root@node-1 canary]# kubectl apply -f ingress-weight.yaml

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

ingress.extensions/web-canary-b created

#To see the split more clearly, test it with a loop

[root@node-1 canary]# while sleep 0.2;do curl http://canary.mooc.com/hello?name=111 && echo "";done

#This gives fairly precise traffic control, but real production traffic still reaches the new version.

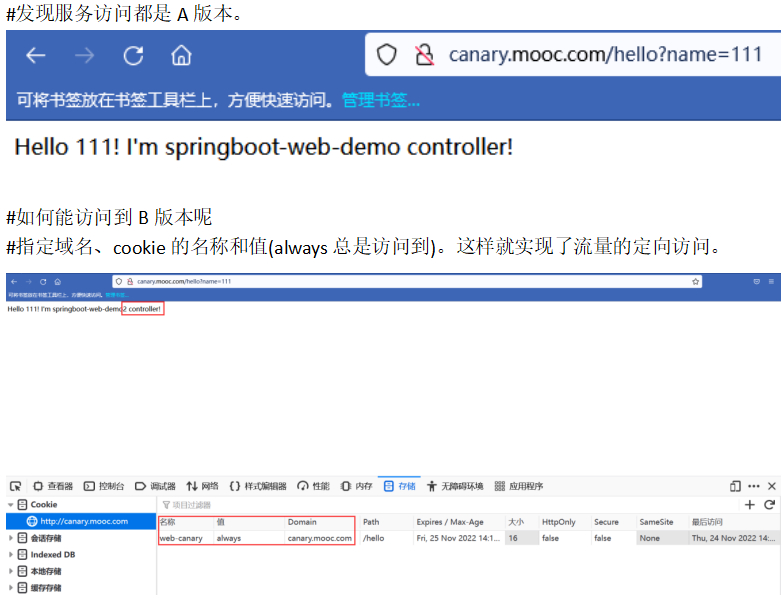

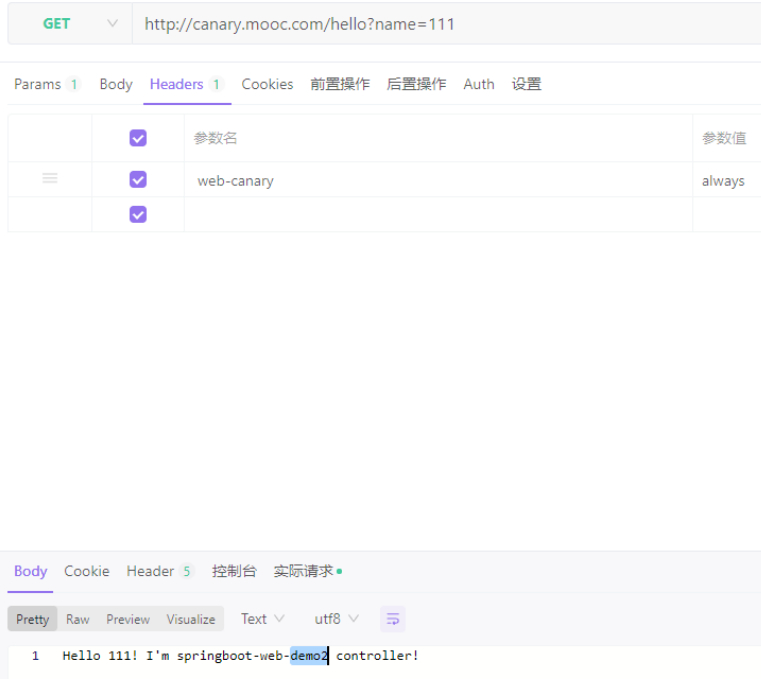
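
To judge whether the split observed in the curl loop is plausible, it helps to know what a 90/10 split looks like over many requests. The following is a minimal simulation sketch. It models only the documented meaning of canary-weight (a percentage of randomly chosen requests), not the controller's actual implementation, and pick_backend and split_percentages are hypothetical helper names.

```python
import random
from collections import Counter


def pick_backend(canary_weight: int, rng: random.Random) -> str:
    """Send roughly canary_weight percent of requests to the canary (web-canary-b)."""
    return "web-canary-b" if rng.random() * 100 < canary_weight else "web-canary-a"


def split_percentages(backends) -> dict:
    """Turn a sequence of backend names into a {name: percentage} mapping."""
    counts = Counter(backends)
    total = sum(counts.values())
    return {name: 100 * n / total for name, n in counts.items()}


rng = random.Random(0)  # fixed seed so the run is repeatable
sample = [pick_backend(90, rng) for _ in range(10_000)]
shares = split_percentages(sample)
print(shares)
```

With only a few dozen real requests, the observed ratio can drift noticeably from 90/10; the share converges as the number of requests grows.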

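Targeted canary routing: canary-by-cookie

======================== In this case, live users are not sent to the new version. Testers verify it first, and only after testing passes does it go through the normal production rollout.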

[root@node-1 canary]# cat ingress-cookie.yaml

#Use a cookie for targeted traffic control.
#The service name is still web-canary-b
#This overwrites the Ingress applied from ingress-weight.yaml (same name, web-canary-b)
#ingress
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web-canary-b
  namespace: canary
  #Key part
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    #Name of the cookie to check
    nginx.ingress.kubernetes.io/canary-by-cookie: "web-canary"
spec:
  rules:
  - host: canary.mooc.com
    http:
      paths:
      - path: /
        backend:
          serviceName: web-canary-b
          servicePort: 80

[root@node-1 canary]# kubectl apply -f ingress-cookie.yaml

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

ingress.extensions/web-canary-b configured

[root@node-1 canary]# kubectl get pods -n canary

NAME READY STATUS RESTARTS AGE

web-canary-a-764df967fc-sfs5q 1/1 Running 0 24m

web-canary-b-547745665c-xt9mk 1/1 Running 0 24m

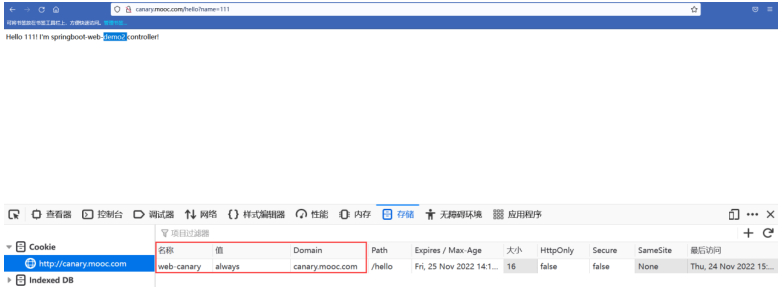

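Adding the cookie by hand, as above, is the testing scenario; there are real-world scenarios as well.

For example, suppose some optimizations were made for female users, and you want real feedback from a subset of them first, without male users reaching the new version.

After a user logs in, the application can check the user's gender. (How can this be done without a restart?)

If the user is female, the server returns a cookie web-canary=always in its response, and the browser stores it. On later visits that user reaches the page prepared for her, which gives precise per-user control.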

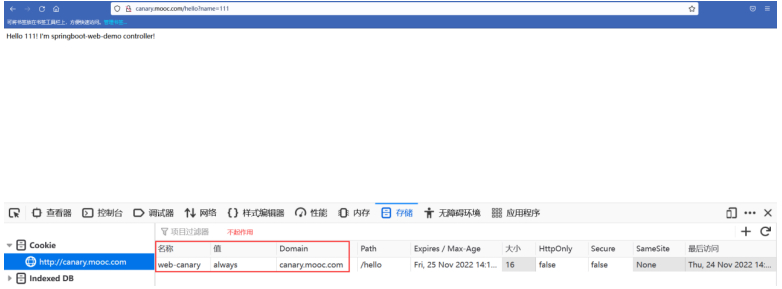

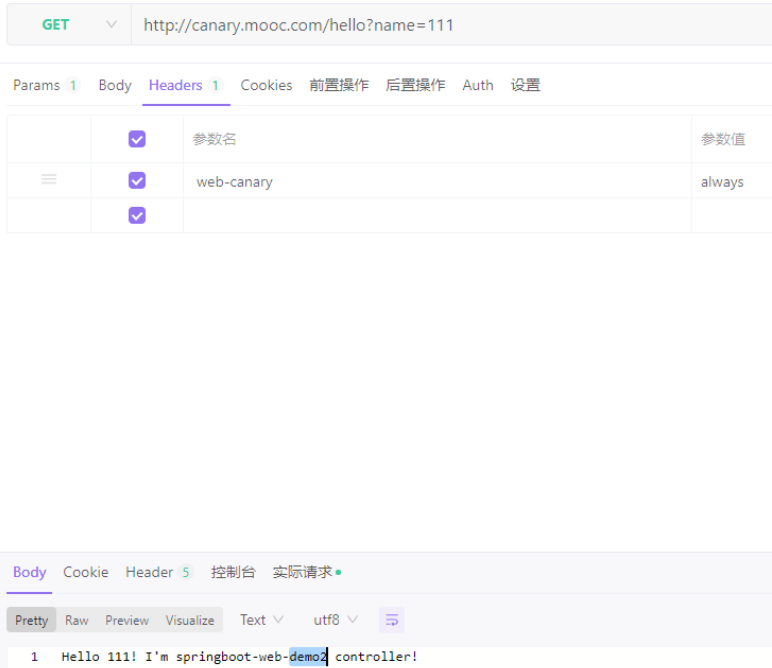
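
The cookie step described above can be sketched on the application side. This is a minimal illustration using Python's standard http.cookies module; the function name canary_set_cookie and the in_canary_group flag are hypothetical, and how the application decides group membership is left out. Only the cookie name web-canary and the value always come from the configuration above.

```python
from http.cookies import SimpleCookie
from typing import Optional

# Must match the nginx.ingress.kubernetes.io/canary-by-cookie annotation.
CANARY_COOKIE = "web-canary"


def canary_set_cookie(in_canary_group: bool) -> Optional[str]:
    """Return a Set-Cookie header value for canary users, or None for everyone else."""
    if not in_canary_group:
        return None
    cookie = SimpleCookie()
    cookie[CANARY_COOKIE] = "always"   # "always" routes the user to the canary
    cookie[CANARY_COOKIE]["path"] = "/"
    return cookie[CANARY_COOKIE].OutputString()


print(canary_set_cookie(True))
```

The login handler would send the returned string as the value of a Set-Cookie response header. Setting the value to never instead would pin the user to the stable version.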

Targeted canary routing: canary-by-header

================ Besides cookies, another common approach is to use a header.

[root@node-1 canary]# cat ingress-header.yaml

#Use a header for targeted traffic control.
#This overwrites the Ingress applied from ingress-cookie.yaml (same name, web-canary-b)
#ingress
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web-canary-b
  namespace: canary
  #Key part
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    #Name of the header to check
    nginx.ingress.kubernetes.io/canary-by-header: "web-canary"
spec:
  rules:
  - host: canary.mooc.com
    http:
      paths:
      - path: /
        backend:
          serviceName: web-canary-b
          servicePort: 80

[root@node-1 canary]# kubectl apply -f ingress-header.yaml

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

ingress.extensions/web-canary-b configured

[root@node-1 canary]# kubectl get pods -n canary

NAME READY STATUS RESTARTS AGE

web-canary-a-764df967fc-sfs5q 1/1 Running 0 34m

web-canary-b-547745665c-xt9mk 1/1 Running 0 34m

#The cookie set for the ingress-cookie.yaml test no longer takes effect; requests now go to service A

#Header test: send the header web-canary: always, and every response comes from service B.

#curl is convenient for this test.

[root@node-1 canary]# curl -H 'web-canary: always' http://canary.mooc.com/hello?name=111
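
The same request can be issued from a script, which is handy in automated checks. The following is a minimal sketch with Python's standard urllib; build_canary_request is a hypothetical helper name, and the actual send is left commented out because it only works where canary.mooc.com resolves to the ingress node.

```python
import urllib.request


def build_canary_request(url: str, header_name: str = "web-canary",
                         value: str = "always") -> urllib.request.Request:
    """Build a GET request carrying the canary header (names are case-insensitive in HTTP)."""
    return urllib.request.Request(url, headers={header_name: value})


req = build_canary_request("http://canary.mooc.com/hello?name=111")

# To send it (requires canary.mooc.com to resolve, e.g. via /etc/hosts):
# with urllib.request.urlopen(req, timeout=5) as resp:
#     print(resp.read().decode())
```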

Canary switching: combining header, cookie, and weight

[root@node-1 canary]# cat ingress-compose.yaml

#Combine header, cookie, and weight for targeted traffic control.
#This overwrites the Ingress applied from ingress-header.yaml (same name, web-canary-b)
#ingress
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  #Name differs from web-canary-a
  name: web-canary-b
  namespace: canary
  #Key part
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    #The three rules below are evaluated in priority order, so they do not conflict.
    #Priority 1: header. If the header is present with the value always or never, it decides.
    #Name of the header to check
    nginx.ingress.kubernetes.io/canary-by-header: "web-canary"
    #Priority 2: cookie. Without the header, the cookie decides.
    #Name of the cookie to check
    nginx.ingress.kubernetes.io/canary-by-cookie: "web-canary"
    #Priority 3: weight. With neither header nor cookie, the weight decides.
    #Percentage of traffic forwarded to the new service web-canary-b.
    nginx.ingress.kubernetes.io/canary-weight: "90"
spec:
  rules:
  #Same host as web-canary-a; think of it as the same service as web-canary-a.
  - host: canary.mooc.com
    http:
      paths:
      - path: /
        backend:
          serviceName: web-canary-b
          servicePort: 80

[root@node-1 canary]# kubectl apply -f ingress-compose.yaml

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

ingress.extensions/web-canary-b configured

[root@node-1 canary]# kubectl get pods -n canary

NAME READY STATUS RESTARTS AGE

web-canary-a-764df967fc-sfs5q 1/1 Running 0 89m

web-canary-b-547745665c-xt9mk 1/1 Running 0 89m

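#Priority 1: header web-canary set to always. Service B responds.

#Priority 2: cookie with value always, no header. Service B responds.

#Priority 2: cookie with value never, no header. Service A responds.

#Priority 3: weight only, no cookie, no header. Responses come from service A or B in proportion to the weight.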
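
The precedence listed above can be sketched as a small decision function. This is a model of the documented rule order (header, then cookie, then weight) written for illustration, not the ingress-nginx implementation; route is a hypothetical name, and header lookup here is by exact lowercase key for simplicity even though real HTTP header names are case-insensitive.

```python
import random
from typing import Mapping, Optional

STABLE = "web-canary-a"
CANARY = "web-canary-b"


def route(headers: Mapping[str, str], cookies: Mapping[str, str],
          canary_weight: int, rng: Optional[random.Random] = None,
          name: str = "web-canary") -> str:
    """Pick a backend using header > cookie > weight precedence."""
    rng = rng or random.Random()
    # Check the header first, then the cookie. Only "always" and "never"
    # are decisive; any other value is ignored and the next rule applies.
    for source in (headers, cookies):
        value = source.get(name)
        if value == "always":
            return CANARY
        if value == "never":
            return STABLE
    # Neither header nor cookie decided: fall back to the weighted split.
    return CANARY if rng.random() * 100 < canary_weight else STABLE


print(route({"web-canary": "always"}, {"web-canary": "never"}, 0))
```

In the call above the header wins over the cookie, so the request goes to web-canary-b even though the cookie says never and the weight is 0.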

Title: Kubernetes (11) Hands-on Practice (11.1/2/3) ingress -- Layer-4 proxying, session persistence, custom configuration, traffic control (Parts 1/2/3)

Author: yazong

URL: https://blog.llyweb.com/articles/2022/11/25/1669305969821.html